Untangling the Knots of Distributed Computing: An Exhaustive Investigation

Introduction

In modern computing, “distributed computing” has become more than a buzzword; it is a paradigm that is changing how we tackle difficult computational problems. Although distributed computing is not a new idea, the rise of cloud computing and edge computing, together with the ever-increasing demand for processing capacity, has pushed it to the forefront of technological innovation.

Defining What Is Meant by Distributed Computing

At its most fundamental level, distributed computing means having a number of networked computers work together on a single task. In contrast to traditional computing models, in which a single machine carries out all calculations, distributed systems break complicated jobs into smaller, more manageable subtasks and spread them across a network of computers. This approach offers several benefits, including better performance, fault tolerance, and scalability.

The Evolution of Distributed Computing

The principles that underpin distributed computing date back to the beginnings of computer networking. The idea did not gain major traction, however, until the latter half of the 20th century, when the emergence of the internet and the need for more reliable and scalable systems fuelled the development of distributed computing frameworks.

One of the most significant milestones in this progression was grid computing, which set out to harness the collective power of geographically scattered resources for scientific and research work. Grid computing laid the groundwork for later advances such as cloud computing.

Key Characteristics of a Distributed System

1. Concurrent Processing and Parallelism

In a distributed system, tasks are broken into smaller chunks so that several nodes can work on them at the same time. This parallelism is the foundation of distributed computing: it lets tasks finish sooner and makes better use of available resources. Managing concurrent operations, however, introduces synchronization and coordination challenges.
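As a minimal sketch of this idea, the snippet below splits one job (summing squares) into chunks and processes them concurrently. Threads inside a single process stand in for networked nodes here, and the names `split_into_chunks`, `sum_of_squares`, and `parallel_sum_of_squares` are illustrative assumptions rather than part of any framework.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n_chunks):
    """Split a list into at most n_chunks contiguous, roughly equal parts."""
    size = max(1, -(-len(items) // n_chunks))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def sum_of_squares(chunk):
    """The subtask each worker runs on its own chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items, n_workers=4):
    """Fan the chunks out to workers, then combine the partial results."""
    chunks = split_into_chunks(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)
```

The final `sum(partials)` is the coordination step: the workers run independently, but their results still have to be gathered in one place.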

2. Fault Tolerance:

Because they are decentralized, distributed systems can be more resilient to failures than a single machine. If one node in the network fails, the remaining nodes can keep the system running. Achieving fault tolerance requires mechanisms for error detection, recovery, and redundancy.
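One common way to detect errors is a heartbeat: each node reports in periodically, and a node that stays silent for too long is treated as failed. The sketch below is a simplified, single-process illustration. The `HeartbeatMonitor` class and its timeout are hypothetical, and timestamps are passed in explicitly so the behavior is easy to follow.

```python
class HeartbeatMonitor:
    """Tracks the last heartbeat seen from each node (hypothetical helper)."""

    def __init__(self, timeout_seconds):
        self.timeout_seconds = timeout_seconds
        self.last_seen = {}  # node name -> time of latest heartbeat

    def record_heartbeat(self, node, now):
        """Note that `node` reported in at time `now` (in seconds)."""
        self.last_seen[node] = now

    def failed_nodes(self, now):
        """Nodes whose latest heartbeat is older than the timeout."""
        return sorted(
            node for node, seen in self.last_seen.items()
            if now - seen > self.timeout_seconds
        )

    def healthy_nodes(self, now):
        """Nodes that have reported in within the timeout window."""
        return sorted(set(self.last_seen) - set(self.failed_nodes(now)))
```

Work assigned to a node that appears in `failed_nodes` could then be rerouted to one from `healthy_nodes`, which is where redundancy comes in.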

3. Scalability:

The ability to scale horizontally is one of the key drivers behind the adoption of distributed computing. When demand for computation rises, an organization can increase its processing capacity by adding nodes to the network. This scalability is essential for applications with fluctuating workloads and for managing very large datasets.
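To illustrate horizontal scaling, the sketch below assigns keys to nodes with a stable hash and counts how many keys each node holds. Simple modulo sharding is an assumption chosen for brevity, and the function names are illustrative.

```python
import hashlib
from collections import Counter


def node_for_key(key, n_nodes):
    """Map a key to a node index with a stable hash (simple modulo sharding)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n_nodes


def keys_per_node(keys, n_nodes):
    """Count how many keys land on each node."""
    counts = Counter(node_for_key(k, n_nodes) for k in keys)
    return [counts.get(i, 0) for i in range(n_nodes)]
```

Calling `keys_per_node(keys, 2)` and then `keys_per_node(keys, 8)` on the same keys shows each node's share shrinking as nodes are added. Modulo sharding moves most keys whenever the node count changes; consistent hashing is a common refinement that reduces that reshuffling.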

4. Decentralized control:

In contrast to centralized systems, in which a single entity controls all resources, distributed systems spread control and decision-making across many nodes. Decentralization makes systems more adaptable, which in turn makes them more robust and more responsive to changing conditions.

5. Communication Protocols:

Effective communication between nodes is essential in distributed computing. Data is exchanged between nodes through communication mechanisms such as the Message Passing Interface (MPI) and Remote Procedure Call (RPC). The choice of mechanism should be guided by the specific requirements of the distributed application.
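To make the RPC idea concrete, here is a small sketch using XML-RPC from Python's standard library, which is only one of many RPC flavors. The server and client run in the same process on the loopback address purely for illustration, and the `add`, `start_server`, and `remote_add` names are assumptions.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a, b):
    """Procedure exposed to remote callers."""
    return a + b


def start_server():
    """Start an XML-RPC server on a free local port in a background thread."""
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(add, "add")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def remote_add(port, a, b):
    """Call `add` over the network as if it were a local function."""
    with ServerProxy(f"http://127.0.0.1:{port}") as proxy:
        return proxy.add(a, b)
```

A caller would start the server, read the chosen port from `server.server_address[1]`, and pass it to `remote_add`. The arguments and result travel as messages over HTTP, even though the call looks like an ordinary function call.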

The term “distributed computing” covers a range of system models and architectures, each designed to meet particular requirements and overcome particular obstacles. Understanding these models is necessary for designing distributed systems successfully.

1. The Client-Server Model:

The client-server model is a cornerstone of distributed computing. In this model, one or more client nodes send requests to a centralized server, which responds to them. The web is a typical example: clients (web browsers) contact a web server to receive pages or data.

2. Peer-to-Peer (P2P) Networks:

In contrast to the client-server architecture, peer-to-peer networks spread processing and data storage across all of the nodes in the network. Each node can act as both a client and a server, working with other nodes toward a shared objective. File-sharing software is a well-known use of P2P networks.
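The dual client and server role can be sketched in a few lines. The in-memory `Peer` class below is a hypothetical illustration: real P2P systems add networking, peer discovery, and integrity checks that are left out here.

```python
class Peer:
    """A node that both serves its own files and requests files from others."""

    def __init__(self, name, files=None):
        self.name = name
        self.files = dict(files or {})
        self.neighbors = []

    def connect(self, other):
        """Link two peers in both directions."""
        self.neighbors.append(other)
        other.neighbors.append(self)

    def serve(self, filename):
        """Server role: return the file content, or None if not held here."""
        return self.files.get(filename)

    def fetch(self, filename):
        """Client role: ask each neighbor in turn and keep a local copy."""
        for peer in self.neighbors:
            content = peer.serve(filename)
            if content is not None:
                self.files[filename] = content
                return content
        return None
```

Once a peer has fetched a file it can serve that file to its own neighbors, so copies spread through the network without any central server.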

3. Cluster Computing:

In cluster computing, numerous computers are connected so that they function as a single unified system. Clusters can be used for parallel processing, with each node responsible for a fraction of the overall workload. High-performance computing (HPC) clusters are widely used for scientific research and simulation.

4. Grid Computing:

Grid computing extends the concept of cluster computing by connecting several clusters, often in different parts of the world, into a single system. This approach is particularly useful for large, data-intensive projects that call for collaboration among a number of organizations or academic institutions. Grid computing has been an essential part of scientific progress in fields such as genomics and physics.

5. Cloud Computing:

Cloud computing has become increasingly prevalent over the past several years. Cloud services give users on-demand access to a pool of computing resources, which may include storage, processing power, and networking capabilities. Users are charged only for the resources they actually use and can scale their infrastructure as requirements change. Major cloud providers include Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

The nature of the application, the need for scalability, and the need for fault tolerance all help determine which distributed computing model to use. Each model has advantages and disadvantages that must be weighed before making a decision.

The Obstacles Faced by Distributed Computing

Although there are significant benefits to using distributed computing, there are also a number of obstacles that come along with it. In order to effectively manage distributed systems, it is necessary to handle challenges relating to communication, data consistency, fault tolerance, and security.

1. Communication Challenges:

Coordinating operations among distributed nodes depends on reliable communication. Latency, bandwidth constraints, and the possibility of network outages all need to be taken into account. Communication protocols and algorithms are designed to make data transfer efficient and to reduce the time nodes spend waiting.
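A rough back-of-the-envelope model shows why latency and bandwidth both matter: the time to deliver a message is approximately the fixed latency plus the payload size divided by the bandwidth. The functions below encode that approximation. The model ignores congestion, retries, and protocol overhead, and the names are illustrative assumptions.

```python
def transfer_time_seconds(payload_bytes, latency_seconds, bandwidth_bytes_per_second):
    """Rough time to deliver one message: fixed latency plus time on the wire."""
    return latency_seconds + payload_bytes / bandwidth_bytes_per_second


def total_time_seconds(n_messages, payload_bytes, latency_seconds,
                       bandwidth_bytes_per_second):
    """Time to send n messages one after another, each paying the latency cost."""
    return n_messages * transfer_time_seconds(
        payload_bytes, latency_seconds, bandwidth_bytes_per_second
    )
```

With an assumed 50 ms latency and a 1 MB/s link, sending 1,000 separate 1,000-byte messages takes about 51 seconds under this model, while sending the same megabyte as a single batch takes about 1.05 seconds. This is one reason batching is a common way to reduce waiting time.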

2. Consistency and Replication:

Keeping data consistent in a distributed database or storage system can be difficult. When data is replicated across numerous nodes, there is a risk that the copies will fall out of sync with one another. Consistency models such as eventual consistency and strong consistency offer different trade-offs between performance and data integrity.
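The difference between the two models can be sketched with a toy replicated store. In the hypothetical `ReplicatedStore` below, strong mode updates every replica before a write returns, while eventual mode updates one replica immediately and the rest only when `sync()` runs. Real systems are far more involved; this is an illustration under those simplifying assumptions.

```python
class Replica:
    """One copy of a key-value store; each key carries a version number."""

    def __init__(self):
        self.data = {}  # key -> (version, value)

    def apply(self, key, version, value):
        """Keep the write only if it is newer than what this replica holds."""
        current_version = self.data.get(key, (0, None))[0]
        if version > current_version:
            self.data[key] = (version, value)

    def read(self, key):
        return self.data.get(key, (0, None))[1]


class ReplicatedStore:
    """Hypothetical store: strong=True updates all replicas before returning."""

    def __init__(self, n_replicas, strong):
        self.replicas = [Replica() for _ in range(n_replicas)]
        self.strong = strong
        self.version = 0
        self.pending = []  # writes not yet propagated to the other replicas

    def write(self, key, value):
        self.version += 1
        self.replicas[0].apply(key, self.version, value)
        if self.strong:
            for replica in self.replicas[1:]:
                replica.apply(key, self.version, value)
        else:
            self.pending.append((key, self.version, value))

    def sync(self):
        """Background propagation step used in eventual mode."""
        for key, version, value in self.pending:
            for replica in self.replicas[1:]:
                replica.apply(key, version, value)
        self.pending.clear()
```

In eventual mode a read from a lagging replica can return stale data until `sync()` has run. Strong mode avoids that, at the cost of making every write wait for all replicas.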

3. Fault Tolerance and Reliability:

Distributed systems must be built to withstand the failure of individual nodes, network partitions, and other unanticipated problems. Fault-tolerance techniques such as replication, load balancing, and automated recovery are among the most important means of keeping a distributed system dependable.
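As one illustration of how load balancing and recovery fit together, the sketch below rotates requests across nodes and skips any node currently marked as down. The `RoundRobinBalancer` class is a hypothetical, in-memory example; how a node gets marked down or up (for instance by health checks) is assumed to happen elsewhere.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out nodes in rotation, skipping any marked as down."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.down = set()
        self._rotation = cycle(self.nodes)

    def mark_down(self, node):
        """Take a failed node out of rotation."""
        self.down.add(node)

    def mark_up(self, node):
        """Put a recovered node back into rotation."""
        self.down.discard(node)

    def next_node(self):
        """Return the next healthy node, or raise if none are available."""
        for _ in range(len(self.nodes)):
            node = next(self._rotation)
            if node not in self.down:
                return node
        raise RuntimeError("no healthy nodes available")
```

Because a failed node is skipped rather than removed, it rejoins the rotation as soon as `mark_up` is called, without reconfiguring the balancer.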

4. Security Concerns:

The decentralized structure of distributed systems leaves them open to a variety of security risks, including unauthorized access, data breaches, and distributed denial-of-service (DDoS) attacks. The design of a distributed system should always include strong security features such as encryption, authentication, and access control.
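Message authentication between nodes can be sketched with an HMAC from Python's standard library. The example assumes the two nodes already share a secret key, and the `sign` and `verify` helper names are illustrative.

```python
import hashlib
import hmac


def sign(message: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for a message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, tag: str, key: bytes) -> bool:
    """Check a tag in constant time to avoid leaking timing information."""
    return hmac.compare_digest(sign(message, key), tag)
```

An HMAC shows that a message came from a holder of the key and was not altered in transit, but it does not hide the contents. Confidentiality requires encryption as well, for example by sending traffic over TLS.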

Distributed computing has moved beyond the confines of theoretical frameworks to become an essential component of the technical fabric of a wide variety of business sectors. In this part, we will examine real-world applications, case studies, and emerging trends that demonstrate the revolutionary power of distributed systems.

1. Internet of Things (IoT):

The rapid growth in the number of IoT devices has driven interest in distributed computing solutions. These devices are found in settings ranging from smart homes to industrial plants, and they generate huge volumes of data that must be processed and analyzed efficiently. Edge computing, a distributed computing paradigm that brings computation closer to the data source, has become a vital part of meeting the real-time processing demands of IoT ecosystems.

2. Big Data Analytics:

Distributed computing plays a central role in big data analytics. Technologies such as Apache Hadoop and Apache Spark, which run on clusters of computers, make distributed processing of huge datasets possible. This lets businesses mine vast volumes of data for actionable insights, supporting informed decisions and predictive analytics.
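The MapReduce programming model associated with Hadoop can be illustrated with a word count. The sketch below runs in a single Python process and does not use the Hadoop or Spark APIs. It only mirrors the map, shuffle, and reduce phases, and the function names are assumptions.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group the emitted values by key, as happens between the two phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the counts for each word."""
    return {word: sum(counts) for word, counts in grouped.items()}


def word_count(documents):
    """Run map over every document, then shuffle and reduce the results."""
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    return reduce_phase(shuffle(pairs))
```

On a cluster, `map_phase` would run on many machines at once, each over its own slice of the documents, and the shuffle would move pairs across the network so that all counts for a given word reach the same reducer.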

3. Container Orchestration:

The growing popularity of containerization, exemplified by Docker, and of orchestration platforms such as Kubernetes has transformed the way applications are deployed and managed. Container orchestration relies on distributed systems to coordinate the deployment, scaling, and monitoring of containerized applications. This approach improves flexibility, scalability, and resource efficiency.

4. Blockchain Technology:

Blockchain, the decentralized, distributed ledger technology that underpins cryptocurrencies, also has potential applications outside of finance. Its use is being investigated in fields such as healthcare, identity verification, and supply chain management. Distributed consensus mechanisms and decentralized validation underpin the security and transparency of blockchain systems.
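The ledger part of this idea can be sketched as a hash chain, in which every block stores the hash of the block before it. The snippet below is a deliberately minimal illustration with hypothetical function names and a made-up block layout. It models no network, no consensus, and no mining.

```python
import hashlib
import json


def block_hash(index, data, previous_hash):
    """Hash the block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Add a block that points back at the current tip of the chain."""
    previous_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "previous_hash": previous_hash,
        "hash": block_hash(index, data, previous_hash),
    })


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    for i, block in enumerate(chain):
        expected_previous = chain[i - 1]["hash"] if i else "0" * 64
        if block["previous_hash"] != expected_previous:
            return False
        expected = block_hash(block["index"], block["data"], block["previous_hash"])
        if block["hash"] != expected:
            return False
    return True
```

Changing the data in any earlier block makes its stored hash stop matching, so `is_valid` returns `False`. In a real blockchain, independent nodes run this kind of check, and a consensus mechanism decides which chain they accept.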

5. Serverless Computing:

Serverless computing, often delivered as Function as a Service (FaaS), lets developers focus on writing code by abstracting away the infrastructure layer. Behind the scenes, serverless platforms distribute the execution of functions across many servers. This approach improves resource efficiency and provides automatic scaling, which makes it an appealing option for certain categories of applications.

Emerging Trends in Distributed Computing

1. Developments in Edge Computing:

Edge computing is evolving to meet the rising demand for low-latency processing, a demand driven by the proliferation of IoT devices. Emerging trends include running artificial intelligence (AI) at the edge and building edge-native applications.

2. Hybrid Cloud Architectures:

Hybrid cloud designs, which combine on-premises infrastructure with cloud services, are gaining popularity among businesses. This approach applies the ideas of distributed computing to integrate and manage workloads seamlessly across different environments.

3. Quantum Computing:

Quantum computing, although still in its infancy, has the potential to radically change what computers can do. The goal of distributed quantum systems is to solve certain complex problems far faster than conventional computers can.

Final Thoughts

Distributed computing has emerged as a central pillar of innovation, driving progress in a variety of fields. Distributed systems have had a significant influence on domains ranging from IoT ecosystems, where they have improved data processing, to blockchain, where they have changed how financial transactions are carried out. As we continue to traverse the ever-shifting landscape of distributed computing, Part 4 will investigate the future trajectory of this paradigm, including possible hurdles, ethical issues, and the role that artificial intelligence will play in defining the next generation of distributed systems. Join us as we conclude our in-depth look at distributed computing by tying up the last few loose ends.

Related Posts

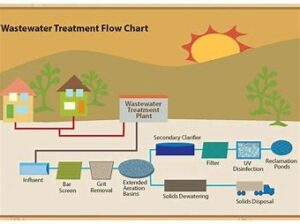

Safeguarding Tomorrow’s Liquid Gold: A Comprehensive Exploration of Wastewater Treatment and Water Supply Systems

Introduction: In the pursuit of sustainable development, one of the most critical challenges we face is the effective management of water resources. As global populations burgeon and industrialization advances, the demand for water escalates, while the supply dwindles. In this

Revolutionizing Quantum Computing – Geeta University

Revolutionizing Quantum Computing – Geeta University Quantum computing is a rapidly developing field that has the potential to revolutionize computing as we know it. Traditional computers operate on bits, which can only be in one of two states: 0 or

The Impact of Climate Change on Civil Infrastructure Design

A Blog by Sachin Bhardwaj A.P SOE Climate change is no longer a distant concern; it is a present-day reality. As global temperatures rise and weather patterns become more extreme, the built environment must adapt to survive. Civil infrastructure, which