Human-in-the-Loop Machine Learning Systems


Introduction

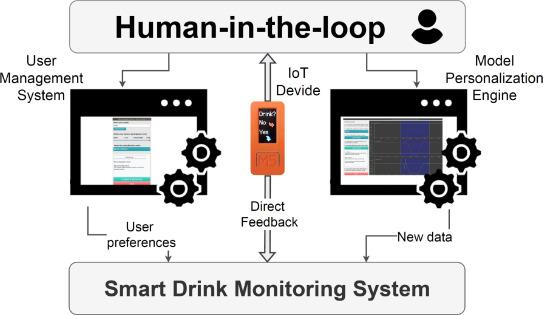

Artificial Intelligence (AI) has rapidly transformed from a futuristic concept into a vital tool that permeates various facets of modern life. At its core, AI aims to create systems capable of performing tasks that typically require human intelligence, such as learning, problem-solving, reasoning, and perception. Among the many emerging paradigms in AI development, Human-in-the-Loop (HITL) machine learning systems stand out as a unique intersection of human expertise and computational power.

The concept of HITL represents a symbiotic relationship where human feedback is continuously integrated into the machine learning process. Rather than relying solely on pre-labeled datasets or autonomous algorithms, HITL systems involve humans at critical junctures—during data labeling, model training, validation, and decision-making. This approach enhances the system’s performance, adaptability, and trustworthiness, especially in high-stakes applications.

The importance of HITL systems has grown with the increasing deployment of AI in areas demanding precision and ethical responsibility. From medical diagnostics to autonomous vehicles, from financial services to content moderation, integrating human insight ensures that AI systems can operate effectively in dynamic and complex environments. Furthermore, HITL enables iterative learning, where models are refined through continuous cycles of human feedback and machine updates.

Historically, AI began with hand-crafted rule-based systems, and machine learning later evolved through supervised learning, in which labeled data guides model training. However, these systems often suffered from brittleness, poor generalization, and ethical blind spots. HITL emerged as a practical solution to these limitations by embedding human judgment directly into the AI lifecycle. Over time, the approach matured, supported by advances in user interface design, active learning, explainable AI, and scalable annotation tools. Today, HITL is not merely an option but a necessity in many real-world AI systems.

As we look to the future, HITL systems are expected to evolve even further, with increasing reliance on human-centered design principles and a commitment to transparency, inclusivity, and accountability. These systems will continue to redefine the interface between human cognition and artificial intelligence, empowering people to shape technology that reflects shared values and collective goals.

Foundations and Core Concepts

At their foundation, Human-in-the-Loop machine learning systems rely on the principle of collaborative intelligence. These systems are designed to leverage the strengths of both humans and machines. While machines excel at processing large volumes of data and recognizing patterns at scale, humans bring contextual awareness, ethical reasoning, and domain expertise that machines lack.

HITL systems operate through an iterative loop involving three main phases: data collection and labeling, model training and inference, and feedback and retraining. In the data collection phase, humans annotate or label data, often using specialized platforms that ensure consistency and quality. These labeled datasets form the training ground for machine learning models, typically supervised learning models.

During the training and inference phase, models are built using algorithms like decision trees, support vector machines, or deep neural networks. These models learn to map inputs to outputs based on the labeled data. Once trained, the model is deployed to make predictions or decisions on new, unseen data. However, this is not the end of the loop.

In the feedback phase, humans evaluate the model’s outputs, identifying errors, edge cases, or instances of bias. This feedback is used to retrain or fine-tune the model, ensuring continuous improvement. This iterative process allows HITL systems to adapt to evolving data distributions, changing requirements, and emerging ethical concerns.
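The three phases can be sketched as a single loop. This is a minimal sketch under some simplifying assumptions. The "model" is a toy one-dimensional threshold classifier. The `human_label` callable stands in for a human reviewer. Both names, and `hitl_loop` itself, are hypothetical placeholders and are not taken from any framework.

```python
from typing import Callable, List, Tuple

Example = Tuple[float, int]  # (feature value, class label)


def train_threshold(data: List[Example]) -> float:
    """Toy model: a decision threshold halfway between the two class means."""
    pos = [x for x, y in data if y == 1]
    neg = [x for x, y in data if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def hitl_loop(labeled: List[Example], stream: List[float],
              human_label: Callable[[float], int], max_rounds: int = 3) -> float:
    threshold = train_threshold(labeled)             # training phase
    for _ in range(max_rounds):
        corrections = []
        for x in stream:                             # inference on new data
            predicted = int(x > threshold)
            truth = human_label(x)                   # feedback phase: a person reviews it
            if truth != predicted:
                corrections.append((x, truth))
        if not corrections:                          # reviewers found no errors
            break
        labeled = labeled + corrections              # feedback becomes training data
        threshold = train_threshold(labeled)         # retraining
    return threshold
```

In this sketch every prediction is reviewed. In practice, reviewers usually see only a subset of predictions, and that subset is exactly where the HITL configurations differ.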

Different HITL configurations exist depending on the level of human involvement. In proactive HITL systems, humans are involved in every decision, such as in medical diagnostics or judicial assessments. In reactive systems, human feedback is used only when the model’s confidence is low or when anomalies are detected. In mixed-initiative systems, both humans and machines can take the lead based on situational demands.
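A reactive configuration often comes down to a confidence gate. The sketch below accepts the model's top label when its probability clears a threshold and otherwise marks the item for human review. The 0.8 threshold and the `route`/`Decision` names are illustrative assumptions. Real systems tune the threshold against reviewer capacity and error cost.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Decision:
    item_id: str
    label: str
    confidence: float
    needs_review: bool  # True -> send to a human instead of acting automatically


def route(predictions: Dict[str, Dict[str, float]], threshold: float = 0.8) -> List[Decision]:
    """Accept confident predictions; flag low-confidence ones for a human reviewer."""
    decisions = []
    for item_id, probs in predictions.items():
        label, conf = max(probs.items(), key=lambda kv: kv[1])  # top class and its probability
        decisions.append(Decision(item_id, label, conf, conf < threshold))
    return decisions
```

For example, with `{"a": {"spam": 0.95, "ham": 0.05}, "b": {"spam": 0.55, "ham": 0.45}}`, item `a` is accepted automatically and item `b` is queued for review.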

Additionally, the integration of HITL systems into operational pipelines requires careful design of user interfaces, efficient communication channels, and robust version control mechanisms. The success of HITL systems depends on how well they can align machine outputs with human expectations and ethical standards. Thus, understanding human behavior, decision-making patterns, and cognitive biases is crucial for effective HITL system design.

HITL in Real-World Scenarios

Human-in-the-loop systems are being deployed in a diverse range of sectors, reflecting their versatility and effectiveness. In healthcare, HITL plays a crucial role in diagnostic imaging, drug discovery, and patient monitoring. AI models trained on vast repositories of medical data can identify potential anomalies in X-rays, MRIs, or CT scans. However, human radiologists review these outputs to confirm findings, reduce false positives, and consider patient history—factors beyond the model’s scope.

In autonomous driving, HITL systems ensure safety and adaptability. While self-driving cars rely on sensors and machine learning algorithms to navigate, human drivers or remote operators intervene in complex or unpredictable situations. Human feedback collected from these interventions helps retrain the models to handle similar situations autonomously in the future.

In customer service, HITL systems enable intelligent chatbots that can handle routine queries but escalate complex issues to human agents. This balance improves efficiency while maintaining service quality. The data from these escalations are analyzed to update the chatbot’s training data, making the system more capable over time.

In the legal domain, HITL assists in contract analysis, e-discovery, and legal research. AI tools can scan thousands of documents to find relevant clauses or precedents, but legal experts validate and interpret the results to ensure accuracy and compliance.

Content moderation on digital platforms is another vital application. AI models detect and flag potentially harmful content such as hate speech, misinformation, or graphic violence. Human moderators review these flags, make final decisions, and provide feedback to improve the model’s sensitivity and specificity.

Beyond these domains, HITL is also making a mark in education, robotics, manufacturing, and smart cities. In education, adaptive learning platforms use AI to tailor lessons to student needs while teachers monitor and adjust content. In robotics, collaborative robots—or cobots—work alongside humans in manufacturing settings, with human input refining robotic behavior and ensuring safety. In smart cities, traffic management systems use HITL to adapt to real-time conditions and citizen feedback, enhancing urban planning and service delivery.

Technical Approaches to HITL Systems

Implementing Human-in-the-Loop (HITL) systems involves a complex blend of software tools, algorithmic techniques, infrastructure components, and workflow strategies designed to create a seamless interaction between human input and machine learning models. The goal is to ensure that human feedback is integrated in a scalable, reliable, and efficient manner across the AI lifecycle.

Data Annotation and Labeling Infrastructure

The foundation of any HITL system lies in high-quality labeled data. Data annotation tools serve as the bridge between human experts and raw data, enabling consistent and scalable labeling. These tools must support various data types—text, image, audio, video, and structured records—and ensure quality through features like consensus scoring, annotation guidelines, and inter-annotator agreement metrics.
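Consensus scoring can be as simple as a majority vote paired with the share of annotators who agreed. Below is a minimal sketch. The `consensus` name and the 0.6 cutoff are hypothetical choices, and commercial tools may compute their quality scores differently. Items that fall below the cutoff return `None` so that they can be escalated to an expert or re-labeled.

```python
from collections import Counter
from typing import List, Optional, Tuple


def consensus(labels: List[str], min_agreement: float = 0.6) -> Tuple[Optional[str], float]:
    """Majority-vote label plus the fraction of annotators who chose it.

    Returns (None, score) when agreement is below min_agreement, signalling escalation.
    """
    counts = Counter(labels)
    top_label, top_count = counts.most_common(1)[0]
    score = top_count / len(labels)
    return (top_label if score >= min_agreement else None), score
```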

Popular tools like Labelbox, Prodigy, Amazon SageMaker Ground Truth, and SuperAnnotate offer advanced features such as annotation templates, custom workflows, and built-in quality control mechanisms. For specialized applications, such as medical imaging or legal document review, custom labeling interfaces are often developed with domain-specific visualizations and contextual information.

Active Learning and Query Strategies

Active learning is a cornerstone of HITL systems, enabling the model to selectively query the human for the most informative or uncertain data points. The most common strategies include uncertainty sampling, query-by-committee, and expected model change. Libraries like modAL and ALiPy offer modular frameworks to implement these strategies. Integration with annotation platforms allows real-time querying and labeling, creating a feedback loop that incrementally improves model performance with minimal annotation effort.
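Uncertainty sampling ranks unlabeled items by how unsure the model is about them and sends the top-ranked items to annotators. The sketch below implements three standard scores (least confidence, margin, and entropy) over a pool of predicted class probabilities. It is hand-rolled for illustration and is not the modAL or ALiPy API. The `select_queries` name is hypothetical.

```python
import math
from typing import Callable, Dict, List


def least_confidence(p: List[float]) -> float:
    return 1.0 - max(p)


def margin(p: List[float]) -> float:
    top2 = sorted(p, reverse=True)[:2]  # assumes at least two classes
    return -(top2[0] - top2[1])         # smaller gap -> more uncertain -> higher score


def entropy(p: List[float]) -> float:
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def select_queries(pool: Dict[str, List[float]], k: int,
                   score: Callable[[List[float]], float] = entropy) -> List[str]:
    """Return ids of the k pool items the model is least sure about."""
    return sorted(pool, key=lambda i: score(pool[i]), reverse=True)[:k]
```

With `{"x": [0.98, 0.02], "y": [0.5, 0.5], "z": [0.7, 0.3]}` and `k=2`, all three scores pick `y` and then `z`. On larger label sets, the three scores can disagree.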

Model Training and Fine-Tuning Pipelines

Once labeled data is collected, HITL systems require robust training pipelines that support iterative development. Frameworks such as TensorFlow, PyTorch, and Hugging Face Transformers are commonly used to build, train, and fine-tune models. Techniques like transfer learning, few-shot and zero-shot learning, and reinforcement learning from human feedback (RLHF) are frequently employed. These training processes are typically containerized and orchestrated using tools such as Docker, Kubernetes, and Apache Airflow to ensure scalability and reproducibility.

Feedback Integration and Iterative Loop Management

Effective HITL systems must efficiently collect, analyze, and integrate human feedback into ongoing model development. This involves feedback capture interfaces, real-time model updates using online learning or streaming data pipelines (e.g., Apache Kafka or Apache Flink), and batch retraining. Versioning tools like MLflow, Weights & Biases, and DVC help track model states, data lineage, and annotation provenance.
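A common pattern between fully online updates and scheduled retraining is to buffer human corrections and retrain once enough have accumulated. The sketch below is a hypothetical in-memory `FeedbackBuffer`. The `retrain` callback and the version counter stand in for a real training job and for the model versioning that tools like MLflow or DVC would provide.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class FeedbackBuffer:
    """Collects human corrections and triggers batch retraining once enough accumulate."""
    retrain: Callable[[List[Tuple[str, str]]], None]  # hypothetical hook into a training job
    batch_size: int = 100
    model_version: int = 0
    pending: List[Tuple[str, str]] = field(default_factory=list)

    def add(self, item_id: str, corrected_label: str) -> bool:
        """Record one correction; return True if it triggered a retrain."""
        self.pending.append((item_id, corrected_label))
        if len(self.pending) >= self.batch_size:
            self.retrain(self.pending)
            self.model_version += 1   # link the new model version to the feedback batch
            self.pending = []
            return True
        return False
```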

Explainable AI (XAI) and Human Interpretability

Interpretability is a critical requirement in HITL systems, especially in regulated industries or life-critical applications. Explainable AI techniques help human collaborators understand and trust the model’s outputs. Common tools include LIME, SHAP, and Captum. These tools are often integrated into HITL dashboards, allowing annotators or decision-makers to see not just what the model predicted but why it made a particular decision.
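To show what a SHAP-style attribution means, the sketch below computes exact Shapley values by brute force. Features outside a coalition are replaced with a baseline value. This is not the SHAP library's API. The library estimates these values far more efficiently. Both the baseline and the `shapley_values` name are assumptions made for illustration. Because the cost grows exponentially with the number of features, this approach only suits tiny inputs.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(f: Callable[[List[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley attributions of f(x) relative to f(baseline)."""
    n = len(x)

    def value(coalition) -> float:
        # Features in the coalition take their real value; the rest take the baseline.
        return f([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))  # marginal contribution of i
    return phi
```

For the linear model `2*x0 + 3*x1` at `x = [1, 1]` with a zero baseline, the attributions are `[2.0, 3.0]`. They sum to `f(x) - f(baseline)`, which is the property a dashboard would rely on when it shows them to a reviewer.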

Collaboration and Human Interface Design

The design of the human interface in a HITL system is not merely aesthetic but functional. Interfaces must facilitate effective human oversight, collaboration, and intervention. Design principles include cognitive load minimization, role-specific dashboards, and feedback granularity. Interfaces are typically web-based and built using frameworks like React, Dash, or Streamlit, with integration to backend ML services via RESTful APIs or gRPC.

Infrastructure and Deployment Considerations

Deploying HITL systems requires scalable, secure, and resilient infrastructure. Key components include cloud services (AWS, GCP, Azure), CI/CD pipelines (Jenkins, GitHub Actions, Argo CD), security protocols (RBAC, data encryption, audit logging), and edge computing for latency-sensitive applications. Hybrid architectures are common, where training occurs in the cloud while inference and feedback loops operate locally.

Challenges, Limitations, and Ethical Considerations

Despite the transformative potential of Human-in-the-Loop (HITL) machine learning systems, their implementation and deployment are riddled with challenges and limitations. These obstacles span technical, organizational, and ethical domains, and addressing them is crucial for building effective, equitable, and sustainable HITL systems.

5.1 Scalability and Operational Complexity

One of the foremost technical challenges in HITL systems is scalability. Involving humans in the loop inherently introduces latency, bottlenecks, and resource constraints. Unlike purely automated systems, HITL architectures must accommodate human input, which can be slow, inconsistent, or unavailable at scale. As data volumes grow, so does the demand for human annotators or reviewers, and meeting that demand may be infeasible when decisions are needed in real time or budgets are tight.
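Some back-of-the-envelope arithmetic shows why this demand bites. The sketch below estimates reviewer headcount from daily volume, the fraction of items routed to humans, and the handling time per item. All figures are hypothetical. The six productive hours per shift is an assumption, not a benchmark.

```python
import math


def reviewers_needed(items_per_day: int, review_fraction: float,
                     seconds_per_item: float, productive_hours_per_shift: float = 6.0) -> int:
    """Reviewers needed to keep up with routed volume, assuming one shift each per day."""
    review_seconds = items_per_day * review_fraction * seconds_per_item
    return math.ceil(review_seconds / (productive_hours_per_shift * 3600))
```

With 1,000,000 items a day, 5% routed to humans, and 30 seconds per item, about 70 reviewers are needed. Doubling the volume raises that to 139. Headcount scales linearly with volume unless the review fraction or the handling time falls.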

Operationalizing HITL systems also requires maintaining complex pipelines that combine human workflows with machine processes. Synchronizing model training with data labeling cycles, ensuring annotation quality, managing retraining triggers, and orchestrating feedback loops demand significant engineering overhead. Tooling such as workflow automation platforms, human task management dashboards, and robust APIs is essential—but these solutions come with their own maintenance costs.

5.2 Quality and Consistency of Human Feedback

HITL systems depend heavily on the quality of human input, which can be inconsistent or biased. Annotators may differ in their interpretations, especially in subjective domains like content moderation, sentiment analysis, or legal assessment. Inconsistencies lead to noisy training data, which in turn affects model reliability.

Efforts to mitigate this include consensus scoring, inter-annotator agreement metrics, and continuous training of annotators. However, achieving high-quality annotations remains resource-intensive. In global systems, additional complexity arises from cultural differences, language ambiguities, and local norms, all of which influence how data is labeled and interpreted.

Crowdsourcing platforms like Amazon Mechanical Turk, while cost-effective, may result in lower quality annotations compared to domain experts. However, using specialists (e.g., doctors, lawyers, engineers) is expensive and time-consuming, creating a trade-off between cost, speed, and accuracy.

5.3 Human Fatigue and Burnout

Another limitation of HITL systems is the potential for human fatigue and burnout, particularly when annotators are exposed to monotonous or distressing content. In content moderation tasks, for instance, individuals must review violent or offensive material, leading to psychological stress. Over time, fatigue reduces annotation accuracy, increases error rates, and can even result in long-term mental health consequences.

To address this, companies are implementing wellness programs, rotating high-stress tasks, using AI pre-screening to filter content, and providing mental health support. Even so, the emotional toll on annotators remains a pressing concern that cannot be entirely eliminated through technical means.

5.4 Bias, Fairness, and Representativeness

While HITL systems are often introduced to mitigate algorithmic bias, they can inadvertently perpetuate or even amplify human biases. If the annotators themselves harbor unconscious biases—whether cultural, gender-based, racial, or political—those biases become embedded in the training data.

Moreover, the demographic homogeneity of annotators (e.g., annotators from one geographic region labeling global content) can lead to models that perform poorly for underrepresented groups. This has profound implications in domains such as healthcare, hiring, finance, and criminal justice, where biased AI decisions can reinforce social inequalities.

Techniques like bias auditing, diverse annotation teams, de-biasing algorithms, and fairness-aware model training help alleviate these issues but require proactive governance and continuous oversight. Merely involving humans is not enough; it is critical to ensure that human input is diverse, well-informed, and ethically aligned.
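A basic bias audit compares error rates across groups on a labeled evaluation set. The sketch below computes per-group error rates and the largest gap between groups. The record format and function names are hypothetical. Error-rate parity is only one of several fairness criteria, and choosing among them is a governance decision rather than a purely technical one.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def error_rates_by_group(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """records: (group, true_label, predicted_label). Returns the error rate per group."""
    totals, errors = defaultdict(int), defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        errors[group] += int(y_true != y_pred)
    return {g: errors[g] / totals[g] for g in totals}


def max_disparity(rates: Dict[str, float]) -> float:
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())
```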

5.5 Cost and Resource Constraints

HITL systems are inherently more expensive than fully automated systems due to the ongoing need for human labor. Labeling datasets, reviewing model outputs, and iteratively providing feedback require sustained human effort, which translates into higher operational costs. In commercial applications where speed and cost-efficiency are critical, this can be a major deterrent.

Additionally, many organizations lack the infrastructure or budget to support HITL pipelines. Even when cloud services and open-source tools are available, small companies or startups may struggle to assemble the necessary talent (e.g., ML engineers, UX designers, subject matter experts) to build and maintain HITL systems.

5.6 Security, Privacy, and Data Governance

Involving humans in data pipelines raises concerns about data security and privacy, particularly when handling sensitive or personal information. Data shared with annotators—especially via third-party platforms—may be susceptible to breaches, leaks, or misuse.

Ensuring compliance with data protection regulations (such as GDPR, HIPAA, or CCPA) becomes a significant challenge. Organizations must implement strict access controls, anonymization techniques, audit logs, and secure environments for annotation tasks.
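Two common anonymization steps before data reaches annotators are pseudonymizing record identifiers and masking direct identifiers in free text. The sketch below uses a keyed hash (HMAC-SHA256) for IDs and simple regular expressions for emails and phone numbers. The patterns and function names are illustrative assumptions. Such patterns catch only obvious cases and fall well short of regulatory de-identification requirements.

```python
import hashlib
import hmac
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize(record_id: str, secret_key: bytes) -> str:
    """Keyed hash: stable across tasks, and annotators without the key cannot recompute it."""
    return hmac.new(secret_key, record_id.encode(), hashlib.sha256).hexdigest()[:16]


def redact(text: str) -> str:
    """Mask obvious emails and phone numbers before text is shown to annotators."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)
```

For example, `redact("Contact jane.doe@example.com or +1 (555) 123-4567.")` yields `"Contact [EMAIL] or [PHONE]."`. The secret key should stay on the data owner's side and never be shared with the annotation platform.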

Furthermore, human annotators may leak proprietary or confidential insights, whether through negligence or malicious intent. Training and legal agreements (e.g., NDAs) offer some protection, but technical safeguards such as differential privacy and homomorphic encryption are increasingly being explored to mitigate these risks.
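Differential privacy applies to statistics released from data, such as counts shown on a dashboard that external annotators can see. The classic Laplace mechanism handles a count. Adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/ε gives ε-differential privacy. The sketch below is illustrative only. Python's `random` module is not a cryptographically secure source, and production systems should use a vetted differential-privacy library.

```python
import random
from typing import Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Release a count with epsilon-differential privacy (counting queries have sensitivity 1)."""
    rng = rng or random.Random()
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Smaller values of ε give stronger privacy and noisier answers. At ε = 1 the typical error is about 1. At ε = 0.1 it is about 10.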

5.7 Ethical Implications and Moral Responsibility

The deployment of HITL systems raises profound ethical questions about accountability, autonomy, and the human role in AI decision-making. When machines are used to make or assist in life-altering decisions—such as diagnosing diseases, approving loans, or recommending prison sentences—who is ultimately responsible for errors?

HITL systems aim to keep humans “in the loop” precisely to maintain accountability. However, if the human role is reduced to rubber-stamping pre-made recommendations, true oversight may be lost. This phenomenon, known as automation bias, occurs when humans place excessive trust in machine outputs and fail to intervene appropriately.
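One way to watch for rubber-stamping is to track how often each reviewer overrides the model and how long they spend per decision. The sketch below flags reviewers who almost never override and decide very quickly. The thresholds, the `Review` record, and the function name are all hypothetical. A low override rate can also simply mean that the model is accurate, so a flag should prompt a closer audit rather than serve as proof of automation bias.

```python
import statistics
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Review:
    reviewer: str
    model_label: str
    final_label: str
    seconds_spent: float


def rubber_stamp_flags(reviews: List[Review], min_override_rate: float = 0.02,
                       min_median_seconds: float = 3.0) -> Dict[str, bool]:
    """Flag reviewers with a near-zero override rate and very short review times."""
    by_reviewer: Dict[str, List[Review]] = {}
    for r in reviews:
        by_reviewer.setdefault(r.reviewer, []).append(r)
    flags = {}
    for name, rs in by_reviewer.items():
        override_rate = sum(r.final_label != r.model_label for r in rs) / len(rs)
        median_time = statistics.median(r.seconds_spent for r in rs)
        flags[name] = override_rate < min_override_rate and median_time < min_median_seconds
    return flags
```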

Designing HITL systems that empower humans to challenge, override, or audit AI decisions is crucial for ethical integrity. Explainability, transparency, and accountability mechanisms must be baked into the system from the outset, not retrofitted as an afterthought.

5.8 Labor Ethics and Workforce Sustainability

A frequently overlooked aspect of HITL systems is the labor ethics of data annotators, particularly in gig economies. Many workers on crowdsourcing platforms operate under precarious conditions—low pay, lack of benefits, and minimal job security. These labor practices raise questions about exploitation, dignity, and fairness in the AI supply chain.

Responsible AI development must include fair compensation, clear task guidelines, and respectful treatment of annotators. Initiatives like the Fairwork Foundation and guidelines from organizations such as Partnership on AI advocate for ethical data annotation practices and worker rights.

Additionally, the potential deskilling of human workers—where people are relegated to repetitive labeling tasks without skill development—poses long-term societal risks. HITL systems should be designed not only to leverage human input but also to enrich human capabilities through upskilling and collaborative engagement.

Across all of these areas, the stakes are raised by the sensitive data many HITL systems handle, such as medical records, legal documents, or personal communications. Ensuring data privacy, security, and compliance with regulations like GDPR or HIPAA is critical, and establishing accountability frameworks for decisions made jointly by humans and machines is equally important.

Despite these challenges, the advantages of HITL systems are too significant to ignore. The key lies in developing ethical guidelines, transparent processes, and adaptive technologies that enhance human-machine collaboration without compromising integrity or inclusiveness.

Evaluation Metrics and System Validation

Evaluating the performance of Human-in-the-Loop systems requires a multidimensional approach that encompasses both traditional machine learning metrics and human-centered factors. Metrics such as accuracy, precision, recall, and F1-score are essential for understanding how well the model performs on labeled tasks. However, these need to be supplemented with indicators of human-machine collaboration quality.

Inter-rater reliability is one such metric that assesses the consistency among human annotators. High agreement indicates a reliable ground truth, while low agreement suggests ambiguity or inconsistent training. Task completion time, user satisfaction, and the frequency of human overrides are also valuable indicators of system performance and usability.
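For two annotators, a standard inter-rater reliability measure is Cohen's kappa, κ = (p_o − p_e) / (1 − p_e). Here p_o is the observed agreement and p_e is the agreement expected by chance from each annotator's label frequencies. A minimal sketch follows. For more than two annotators, measures such as Fleiss' kappa or Krippendorff's alpha are used instead.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Agreement between two annotators on the same items, corrected for chance."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(a)
    p_observed = sum(x == y for x, y in zip(a, b)) / n
    ca, cb = Counter(a), Counter(b)
    p_chance = sum(ca[k] * cb[k] for k in ca) / (n * n)
    if p_chance == 1.0:
        return float("nan")  # kappa is undefined when both used one identical label
    return (p_observed - p_chance) / (1 - p_chance)
```

Suppose two annotators agree on 3 of 4 items, with one labeling `y, y, n, n` and the other `y, n, n, n`. Then p_o = 0.75 and p_e = 0.5, which gives κ = 0.5. Chance correction is why this is noticeably lower than the raw 75% agreement.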

Explainability and interpretability play crucial roles in HITL validation. If users can understand model predictions, they are more likely to provide constructive feedback and correct errors. Tools like SHAP (SHapley Additive exPlanations), LIME (Local Interpretable Model-agnostic Explanations), and attention maps help interpret complex models, fostering better collaboration.

Simulation environments are often used to test HITL systems under various conditions. These allow designers to study system behavior, identify failure modes, and refine feedback mechanisms without deploying the model in the real world. A/B testing is another effective strategy, comparing HITL-enhanced systems against fully automated or fully manual systems to quantify improvements.
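When an A/B test compares error rates between a fully automated variant and a HITL variant, a two-proportion z-test is one common way to check whether the difference is larger than chance. The sketch below uses the pooled normal approximation, which assumes reasonably large samples. The figures in the usage note are hypothetical.

```python
import math
from typing import Tuple


def two_proportion_z_test(errors_a: int, n_a: int, errors_b: int, n_b: int) -> Tuple[float, float]:
    """Compare error rates of two system variants; returns (z, two-sided p-value)."""
    p_a, p_b = errors_a / n_a, errors_b / n_b
    pooled = (errors_a + errors_b) / (n_a + n_b)          # error rate under "no difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))            # equals 2 * (1 - Phi(|z|))
    return z, p_value
```

Suppose the automated variant makes 120 errors in 1,000 cases and the HITL variant makes 80. The test then gives z ≈ 2.98 and p < 0.01.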

The evaluation process should also consider long-term outcomes. Are HITL systems reducing bias over time? Are they improving generalization and robustness? Monitoring these trends helps ensure that HITL systems remain effective, ethical, and aligned with user expectations.

Future Directions and Innovations

The future of Human-in-the-Loop machine learning is poised for rapid innovation, driven by emerging technologies, societal needs, and the growing emphasis on ethical AI. One promising direction is the integration of federated learning with HITL. Federated learning allows models to be trained across decentralized devices or servers without transferring sensitive data. Combining this with HITL mechanisms can enhance privacy, personalization, and responsiveness.
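The server-side step of federated averaging (FedAvg) is a weighted mean of the model parameters sent by clients, where each client is weighted by how many local examples it trained on. The sketch below assumes a hypothetical HITL setup in which those local examples include users' on-device corrections. Only parameters leave each device. Parameter updates can still leak information, so in practice they are often combined with secure aggregation or differential privacy.

```python
from typing import List, Tuple


def federated_average(client_updates: List[Tuple[List[float], int]]) -> List[float]:
    """FedAvg aggregation: weight each client's parameter vector by its number of local examples."""
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]
```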

Another frontier is the use of edge computing in HITL systems. By bringing computation closer to the data source, edge AI reduces latency and enables real-time human feedback in environments like manufacturing lines, autonomous drones, and smart homes. This decentralization expands the reach of HITL beyond centralized cloud-based systems.

The rise of generative AI tools also opens new opportunities for HITL. Systems like ChatGPT or DALL·E can generate high-quality text or images but often need human oversight to ensure relevance, accuracy, and appropriateness. HITL workflows can fine-tune these outputs for specific use cases, such as creative writing, product design, or customer engagement.

In the realm of policy and governance, HITL systems are being explored as tools for transparent and accountable decision-making. Governments and organizations are increasingly looking to embed human oversight in automated systems to ensure compliance with ethical standards and democratic values. This includes AI in judicial sentencing, welfare distribution, and urban planning.

Education and workforce development will also benefit from HITL systems. Intelligent tutoring systems that combine AI feedback with teacher input can provide personalized learning experiences at scale. In professional training, HITL simulations can prepare workers for complex scenarios by integrating real-time feedback and adaptive content delivery.

As quantum computing matures, it may enhance HITL systems by solving optimization problems faster and enabling more sophisticated simulations. This could improve how HITL systems model uncertainty, handle large-scale feedback, and adapt to complex environments.

Ultimately, the future of HITL will be shaped by a commitment to inclusivity, transparency, and shared benefit. It will involve not just technological advances but also cultural and institutional changes that empower people to participate meaningfully in shaping AI systems.

Conclusion

Human-in-the-Loop machine learning systems represent a powerful convergence of human insight and artificial intelligence. They provide a framework where the strengths of each complement the limitations of the other, creating systems that are more accurate, ethical, and responsive.

The journey from rule-based systems to collaborative AI highlights the growing recognition that human oversight is not a hindrance but a strength. Whether it’s diagnosing diseases, moderating online content, or designing safer autonomous systems, HITL approaches ensure that machines remain grounded in human values and contextual understanding.

Despite challenges such as scalability, bias, and system complexity, the benefits of HITL systems make them indispensable in many applications. The path forward involves thoughtful design, robust evaluation, and ongoing collaboration between engineers, domain experts, ethicists, and end-users.

As we navigate an era where AI increasingly influences decisions that affect lives and societies, HITL systems offer a blueprint for responsible AI. They enable us to build technology that listens, learns, and evolves in partnership with humanity—turning AI from a tool of automation into a collaborator for progress.