Emotion Recognition and Sentiment Analysis with Deep Learning

Emotion recognition and sentiment analysis are areas of artificial intelligence (AI) that aim to infer human emotions from data such as text, speech, facial expressions, and physiological signals. They have attracted growing attention as user-generated data on digital platforms has become abundant. Deep learning, a branch of machine learning built on multi-layer neural networks loosely inspired by the brain, has improved both the accuracy and the scope of emotion and sentiment analysis. As a result, deep learning models are now used in healthcare, customer service, marketing, education, entertainment, and other sectors.

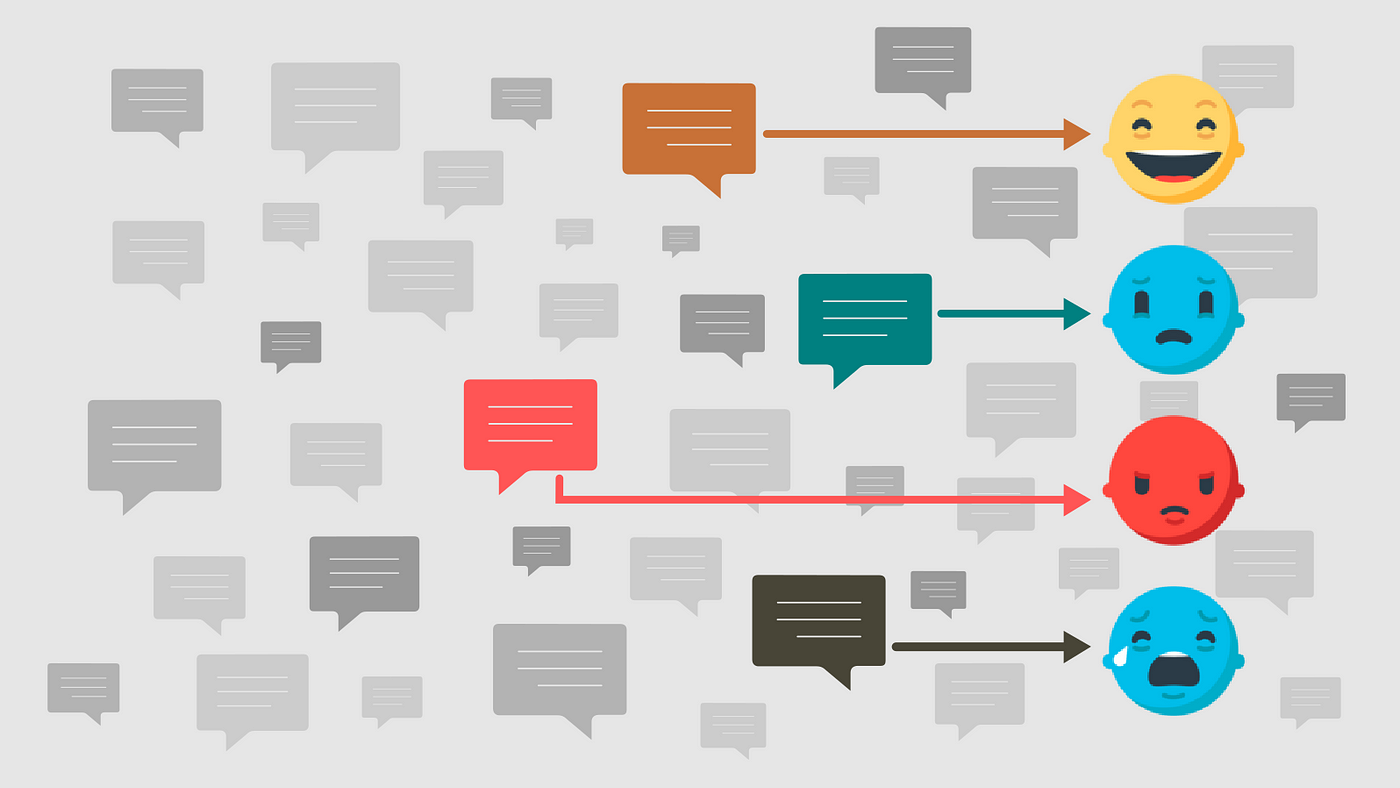

Emotion recognition refers to the process of identifying emotions like happiness, sadness, anger, surprise, fear, and disgust by analyzing various human inputs. It aims to recognize not just the presence of emotion, but its intensity and context. Sentiment analysis, on the other hand, is often used in the realm of natural language processing (NLP) to determine the sentiment expressed in a body of text—typically classifying it as positive, negative, or neutral. While both concepts deal with affective computing, emotion recognition tends to be more granular and complex, involving multidimensional affective states.

In the early stages, traditional machine learning models such as Support Vector Machines (SVMs), Naïve Bayes classifiers, and Decision Trees were employed. These models relied heavily on handcrafted features and domain-specific knowledge. They faced limitations in terms of generalization and adaptability, especially when dealing with large, unstructured, and complex datasets. With the advent of deep learning, the focus shifted to automatic feature extraction, enabling better performance and generalization across multiple tasks.

The adoption of neural networks changed textual sentiment analysis. Early approaches such as Bag-of-Words (BoW) and Term Frequency-Inverse Document Frequency (TF-IDF) represent a document by its word counts, so they capture neither semantics nor word order. Word embeddings such as Word2Vec and GloVe addressed the first limitation by placing words in a continuous vector space in which semantically related words lie close together. Subsequently, transformer-based architectures like BERT (Bidirectional Encoder Representations from Transformers), RoBERTa, and GPT achieved substantially stronger results, because self-attention lets them capture long-range dependencies and context-dependent word meaning.
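The word-order limitation of Bag-of-Words can be seen in a few lines. The following is a minimal sketch, not a production tokenizer: the function name `bag_of_words` and the two example sentences are illustrative assumptions, and tokenization is plain whitespace splitting.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count lowercase whitespace-separated tokens, discarding word order."""
    return Counter(text.lower().split())


# Two sentences with opposite sentiment but identical word counts.
positive = bag_of_words("the plot was good not bad")
negative = bag_of_words("the plot was bad not good")

# The representations are equal, so no classifier built on them
# can tell the two sentences apart.
print(positive == negative)
```

Because both sentences contain exactly the same tokens, any model that sees only these counts has to assign them the same sentiment.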

Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), particularly Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, are effective at processing spatial and sequential data. CNNs, although originally designed for image processing, have been adapted to text by sliding one-dimensional filters over sequences of word embeddings, so that each filter responds to a short phrase pattern much as an image filter responds to a local patch of pixels. RNNs, LSTMs, and GRUs capture sequential dependencies in language and are used for both text-based sentiment analysis and speech emotion recognition.

Speech-based emotion recognition is a vibrant area of research and application. Human speech carries rich emotional information embedded in tone, pitch, tempo, and prosody. Features such as Mel-frequency cepstral coefficients (MFCCs), spectral contrast, and energy are typically extracted from audio signals and used as inputs to deep learning models. CNNs are often used to process spectrograms, while LSTMs and attention-based models help capture temporal patterns. Emotion detection from speech is widely used in virtual assistants, call centers, and emotion-aware devices.
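Of the features listed above, frame-level energy is the simplest to compute by hand. The sketch below assumes a mono signal given as a list of floats and uses a synthetic sine wave instead of real speech; the function name `frame_energies`, the frame length, and the hop size are illustrative choices. MFCCs and spectral contrast require a spectral analysis step that is not shown here.

```python
import math


def frame_energies(samples, frame_len, hop):
    """Return the mean squared amplitude of each overlapping frame."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        energies.append(sum(s * s for s in frame) / frame_len)
    return energies


# Synthetic signal (assumption): 0.1 s of a quiet tone, then 0.1 s of a loud one.
sample_rate = 8000
n = sample_rate // 10
quiet = [0.1 * math.sin(2 * math.pi * 220 * i / sample_rate) for i in range(n)]
loud = [0.8 * math.sin(2 * math.pi * 220 * i / sample_rate) for i in range(n)]

energies = frame_energies(quiet + loud, frame_len=200, hop=100)
print(len(energies), energies[0] < energies[-1])
```

A sequence of per-frame values like this one is the kind of input an LSTM or attention-based model would consume to track how vocal intensity changes over an utterance.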

Facial expression analysis is another critical modality. Deep CNNs trained on facial expression datasets such as FER-2013, AffectNet, and CK+ are capable of identifying subtle variations in facial movements. These networks capture both local and global facial features, such as eyebrow positioning, eye widening, and lip movement. 3D Convolutional Neural Networks and hybrid CNN-RNN architectures have been developed to analyze facial emotions over time in video data, improving the accuracy of dynamic emotion recognition.

Multimodal emotion recognition integrates inputs from text, speech, facial expressions, and sometimes physiological data such as EEG and heart rate. This holistic approach leverages the strengths of different modalities to enhance robustness and accuracy. Fusion techniques are categorized as early fusion (combining features before modeling), late fusion (combining outputs), and hybrid fusion. Multimodal deep learning models like Multimodal Transformer and Tensor Fusion Network have been proposed to handle cross-modal alignment and representation.
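The difference between early and late fusion can be sketched without any model at all. In the example below, the feature vectors, the class probabilities, and the function names `early_fusion` and `late_fusion` are hypothetical; the weighted average is one simple late-fusion rule among several possible ones.

```python
def early_fusion(*modality_features):
    """Concatenate per-modality feature vectors into one input vector."""
    fused = []
    for feats in modality_features:
        fused.extend(feats)
    return fused


def late_fusion(prob_dists, weights):
    """Weighted average of per-modality class probability distributions."""
    total = sum(weights)
    n_classes = len(prob_dists[0])
    return [
        sum(w * p[c] for w, p in zip(weights, prob_dists)) / total
        for c in range(n_classes)
    ]


# Early fusion: a single downstream model would see one 5-dimensional vector.
text_feats = [0.2, 0.9, 0.1]
audio_feats = [0.5, 0.4]
print(early_fusion(text_feats, audio_feats))

# Late fusion: each modality has its own classifier, and outputs are combined.
# Class order (assumption): [positive, negative, neutral].
text_probs = [0.7, 0.2, 0.1]
audio_probs = [0.3, 0.6, 0.1]
print(late_fusion([text_probs, audio_probs], weights=[1.0, 1.0]))
```

Hybrid fusion combines the two, for example by concatenating intermediate representations while also averaging the per-modality predictions.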

The performance of emotion and sentiment recognition models is highly dependent on the quality of training data. Text datasets like the Stanford Sentiment Treebank (SST), IMDb, Amazon Reviews, and Yelp Reviews are used for sentiment classification. For speech emotion recognition, datasets like IEMOCAP, RAVDESS, CREMA-D, and EmoDB are widely utilized. Visual datasets include FER+, AffectNet, and JAFFE. Multimodal datasets like CMU-MOSEI, CMU-MOSEAS, and MELD provide synchronized text, audio, and visual signals. However, challenges such as label ambiguity, class imbalance, and noise in annotations persist.
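Class imbalance is often countered by weighting the loss for each class inversely to its frequency. The sketch below uses the common "balanced" heuristic, n_samples / (n_classes * class_count); the label distribution and the function name `inverse_frequency_weights` are made up for illustration.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count)
            for label, count in counts.items()}


# Hypothetical imbalanced emotion labels: 6 neutral, 3 happy, 1 fear.
labels = ["neutral"] * 6 + ["happy"] * 3 + ["fear"] * 1
weights = inverse_frequency_weights(labels)
for label, weight in sorted(weights.items(), key=lambda kv: kv[1]):
    print(f"{label}: {weight:.2f}")
```

Rare classes such as "fear" receive the largest weight, so errors on them contribute more to the training loss.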

One of the enduring challenges in emotion and sentiment analysis is subjectivity. Emotional expressions vary not only from person to person but also across cultural, social, and situational contexts. This variability complicates model generalization and makes it difficult to benchmark performance. Furthermore, sarcasm, irony, metaphors, and cultural idioms often confuse sentiment classifiers, especially in the absence of explicit context.

Bias in datasets and models is another concern. Overrepresentation of certain demographic groups can lead to biased predictions and exacerbate social inequalities. Deep learning models trained on biased data may inherit and amplify those biases. There is a growing movement toward ethical AI that emphasizes fairness, accountability, and transparency in model training, deployment, and evaluation.
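One basic check for this kind of bias is to report accuracy separately for each demographic group rather than as a single aggregate. The following sketch assumes that group membership is available for each evaluation example; the labels, predictions, and group names are invented for illustration, and the function name `accuracy_by_group` is hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute classification accuracy separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Invented evaluation data: group A is overrepresented.
y_true = ["happy", "sad", "angry", "happy", "sad", "angry"]
y_pred = ["happy", "sad", "angry", "sad", "sad", "happy"]
groups = ["A", "A", "A", "A", "B", "B"]

print(accuracy_by_group(y_true, y_pred, groups))  # {'A': 0.75, 'B': 0.5}
```

A large gap between groups is a signal to examine the training data and the model more closely; it does not by itself identify the cause.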

Interpretability and explainability are crucial for building trust in AI systems, especially in sensitive domains like healthcare and law. Techniques such as attention heatmaps, SHAP values, and LIME have been introduced to explain model predictions. Explainable AI (XAI) is an ongoing area of research aiming to make deep learning models more transparent and understandable to non-experts.
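The intuition behind perturbation-based explanations can be shown with a much simpler method than SHAP or LIME: remove one word at a time and measure how the prediction changes. The sketch below is a leave-one-out occlusion analysis, not an implementation of either library, and the lexicon-based scorer with its weights is a hypothetical stand-in for a trained model.

```python
# Hypothetical toy "model": sums per-word sentiment weights.
LEXICON = {"great": 1.0, "love": 1.0, "boring": -1.0, "terrible": -1.5}


def score(tokens):
    """Sentiment score of a token list under the toy lexicon model."""
    return sum(LEXICON.get(tok, 0.0) for tok in tokens)


def occlusion_attributions(tokens):
    """Attribute to each token the score change caused by deleting it."""
    base = score(tokens)
    return [(tok, base - score(tokens[:i] + tokens[i + 1:]))
            for i, tok in enumerate(tokens)]


tokens = "great cast but terrible pacing".split()
for tok, contribution in occlusion_attributions(tokens):
    print(f"{tok:>9}: {contribution:+.1f}")
```

Here "great" receives +1.0, "terrible" receives -1.5, and the remaining words receive 0.0, which matches the toy model by construction. With a real neural model the same loop applies, but the attributions are only an approximation, because words interact.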

Privacy and ethical concerns must also be addressed, particularly in applications involving facial recognition or emotion tracking. Users must be informed about how their data is used, and systems must comply with regulations like GDPR. There is a fine line between helpful personalization and intrusive surveillance. Maintaining this balance is essential to ensure public trust and acceptance.

Real-world applications of emotion recognition and sentiment analysis are vast and growing. In the business sector, they are used for analyzing customer feedback, automating responses, and improving product recommendations. In healthcare, they assist in mental health diagnosis, emotional well-being tracking, and even robotic companionship. In education, systems can adapt content delivery based on student emotions, thereby enhancing engagement and learning outcomes.

Social media monitoring is another key application. Governments, political organizations, and businesses use sentiment analysis tools to assess public opinion, detect emerging trends, and manage crises. In entertainment, video games and interactive media are increasingly incorporating emotional responsiveness to create more immersive experiences. Virtual assistants and chatbots are being designed to recognize and respond to user emotions, making interactions more natural and effective.

The automotive industry is also exploring emotion recognition to enhance safety. By analyzing drivers’ facial expressions, voice tone, and physiological signals, systems can detect fatigue, stress, or distraction and initiate preventive actions. Similarly, smart home devices are integrating emotion recognition to provide personalized environments—adjusting lighting, music, and temperature based on the user’s mood.

As research progresses, new frontiers in emotion and sentiment analysis are emerging. Self-supervised learning and semi-supervised learning are being explored to overcome the scarcity of labeled data. These techniques leverage unlabeled data for pre-training, followed by fine-tuning on small labeled datasets. Few-shot and zero-shot learning methods are also gaining attention for their potential to generalize across tasks and domains with minimal supervision.

Edge computing is enabling the deployment of emotion recognition models on resource-constrained devices such as smartphones, wearables, and IoT systems. This development allows real-time emotion tracking while preserving user privacy by avoiding data transmission to cloud servers. Moreover, federated learning is being investigated to train models collaboratively across devices without sharing raw data.
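The server-side aggregation step of federated learning can be sketched as a weighted average of client model parameters, in the style of federated averaging. The example assumes each device has already trained locally and sends back only a flat list of parameters plus its number of training examples; the values and the function name `federated_average` are illustrative, and local training, communication, and privacy mechanisms are omitted.

```python
def federated_average(client_params, client_sizes):
    """Average client parameter vectors, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [
        sum(params[i] * size
            for params, size in zip(client_params, client_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical devices: the second one holds three times as much data.
client_params = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [1, 3]

print(federated_average(client_params, client_sizes))  # [2.5, 3.5]
```

Only the parameter vectors leave the devices in this scheme; the raw audio, video, or physiological recordings stay local.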

Cross-cultural and multilingual models are being developed to ensure inclusivity. Emotions are often expressed differently across cultures, and language-specific sentiment lexicons may not generalize well. Multilingual transformers like mBERT and XLM-RoBERTa are being employed to build models that can operate across multiple languages and dialects.

Future systems may incorporate neuro-symbolic AI, which combines the learning capabilities of neural networks with the reasoning power of symbolic systems. Such models can provide better interpretability and generalization, addressing some of the current limitations in emotion and sentiment analysis.

Taken together, these developments show that emotion recognition and sentiment analysis with deep learning form an active and consequential field. As technology advances and interdisciplinary collaboration grows, we can expect more accurate, ethical, and personalized systems. These technologies stand to reshape human-computer interaction, support businesses and healthcare systems, and bring emotionally intelligent interfaces into daily life. Several further application areas illustrate this reach.

The integration of emotion recognition in robotics marks another pivotal evolution in the field. Robots equipped with emotion-sensing capabilities can enhance user interaction in caregiving, hospitality, and education. By reading emotional cues, such robots can offer companionship to the elderly, adapt their teaching style for students, or respond empathetically in customer service roles. Deep reinforcement learning is being explored to make these robots capable of adapting their behaviors based on emotional feedback, leading to truly dynamic human-robot interactions.

Additionally, wearable technologies are now incorporating emotion tracking as a built-in feature. Devices such as smartwatches, fitness trackers, and smart glasses monitor physiological signals like heart rate variability, skin temperature, and electrodermal activity to infer emotional states. These readings are then processed by deep learning models to offer users insights into their mood patterns and stress levels. Wearable-based emotion recognition is gaining traction in personalized health monitoring, workplace wellness programs, and athletic performance enhancement.

Gaming and virtual reality (VR) also represent promising domains. Emotion-aware VR experiences can adjust in real time based on a player’s emotional feedback, offering deeper immersion and engagement. For instance, a horror game may adjust the intensity of its scenes based on the player’s fear level, detected via heart rate or facial expressions. These innovations promise to revolutionize how players experience digital environments, blurring the line between real and virtual emotional interactions.

Legal and forensic applications of emotion recognition are increasingly being studied. For example, analyzing emotional tone in witness testimonies, interviews, or courtroom behavior can help assess credibility or detect stress. While these applications must be approached cautiously, with strict ethical guidelines, they hold potential in supporting legal investigations and understanding human behavior in legal contexts.

In marketing and advertising, sentiment and emotion analysis help tailor content that resonates emotionally with audiences. Brands monitor reactions to campaigns on social media, analyzing whether the sentiment is in alignment with the brand’s image. Deep learning helps uncover underlying emotions that text analytics alone may miss, enabling more targeted and effective marketing strategies.

On a scientific front, the study of emotional dynamics—how emotions evolve over time—is gaining attention. Temporal emotion modeling using deep sequential models or transformers is being used to understand mood shifts, emotional trajectories, and patterns. This research is valuable in psychotherapy, behavior prediction, and improving adaptive systems.

Finally, the evolution of foundation models, including large multimodal models like GPT-4, DALL·E, and CLIP, promises a more integrated understanding of emotion. These models relate information across modalities, most commonly text and images, and related systems extend the same approach to audio, which points toward a unified framework for affective computing. As generalist AI systems evolve, they are expected to offer more holistic emotional intelligence, blending perception, cognition, and response.

In essence, emotion recognition and sentiment analysis are no longer confined to academic research. They are embedded in daily technologies, redefining how humans interact with machines. With ongoing advancements in data collection, computational power, and ethical governance, these AI capabilities are expected to grow more human-centric, intuitive, and indispensable in the near future.