NLP for Knowledge Extraction from Scientific Literature

Introduction

The exponential growth of scientific literature is a double-edged sword. On one side, it reflects thriving global research and innovation, with new findings constantly pushing the boundaries of what we know. On the other, it produces a surge of information that is increasingly difficult to manage, consume, and synthesize. With an estimated 2.5 million or more scholarly articles published annually across domains such as medicine, computer science, physics, and environmental science, the sheer volume poses a significant challenge for researchers, data scientists, and academic institutions alike. Staying up to date with the latest advancements, identifying relevant studies, and conducting comprehensive literature reviews have become daunting tasks, often leading to redundant research, delays in discovery, and overlooked insights. Most of this scientific knowledge is embedded in unstructured text, such as journal articles, PDFs, dissertations, and conference proceedings, which is not easily searchable or machine-readable. This creates a critical bottleneck in the research lifecycle, because traditional manual methods of reading, annotating, and synthesizing papers no longer scale.

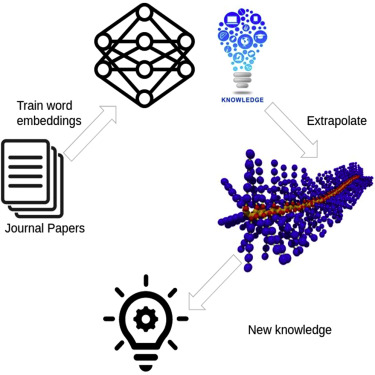

Natural Language Processing (NLP), a branch of artificial intelligence focused on enabling computers to understand and process human language, has emerged as a transformative solution to this challenge. NLP offers the ability to automatically interpret, extract, organize, and summarize information from scientific texts. By leveraging machine learning, linguistic analysis, and deep learning models, NLP allows systems to comprehend complex scientific language, identify key entities such as genes, compounds, or algorithms, understand relationships among them, and present this information in structured, usable formats. The ability to process thousands of documents rapidly not only saves time but also helps uncover hidden patterns, connections, and emerging trends that might go unnoticed through manual analysis. For example, a biomedical researcher could use NLP tools to mine thousands of articles for information about gene-disease associations, while a climate scientist might track research trends related to global warming and carbon emissions across decades of published work.

One of the primary reasons NLP is becoming indispensable is the growing problem of information overload. The more research is published, the harder it becomes to filter out noise and identify genuinely relevant or high-impact work, which slows the dissemination and application of knowledge. Researchers risk missing important findings simply because they are buried under volumes of unrelated content. Policymakers and funding agencies may overlook key developments in emerging fields. Organizations seeking to make data-driven decisions can struggle to integrate the latest scientific insights into their processes. NLP helps alleviate this burden by automating key tasks: named entity recognition (NER), which identifies domain-specific terms like drug names, biological pathways, or technological concepts; relation extraction, which connects these entities (e.g., a drug treats a disease); and document classification, which sorts papers into relevant topics or disciplines.

Another crucial application of NLP in this space is summarization. Scientific articles are often dense, technical, and lengthy, making it difficult to quickly understand their core contributions. NLP-powered summarization techniques, both extractive (selecting key sentences) and abstractive (generating new text), can produce concise summaries or bullet-pointed highlights, enabling researchers to scan papers more efficiently. Additionally, topic modeling techniques like Latent Dirichlet Allocation (LDA) can group large collections of articles into thematic clusters, helping users discover new connections or identify knowledge gaps. All of these capabilities are further enhanced by advances in deep learning and transformer models such as BERT, which is pre-trained on general-domain text, and its domain-adapted variants SciBERT and BioBERT, which are pre-trained on scientific and biomedical corpora respectively and therefore capture the context, syntax, and semantics of research writing more accurately.

The practical applications of NLP in scientific literature analysis are broad and impactful. Academic researchers can use NLP-powered tools to streamline systematic literature reviews, identify key papers for citation, and track emerging research trends. Pharmaceutical companies apply NLP to explore new drug repurposing opportunities, detect potential adverse drug reactions, or mine clinical trial data. Healthcare providers benefit from NLP systems that match patients to suitable clinical trials by cross-referencing eligibility criteria with medical histories. Government agencies and policy think tanks can monitor the state of scientific knowledge on pressing societal issues, such as climate change or pandemic preparedness, using automated literature analysis. Even technology firms use NLP to analyze patents, technical reports, and research publications to inform R&D strategies and innovation pipelines.

Looking ahead, the future of NLP in scientific literature holds even greater promise. We are on the cusp of integrating multimodal AI capabilities that will allow systems not only to read text but also to interpret scientific charts, diagrams, and tables—components that often carry crucial data. Personalized research assistants powered by NLP could alert scientists to newly published papers matching their interests or even draft initial literature reviews. Moreover, as NLP models become more accurate and context-aware, they could support scientific hypothesis generation, offering suggestions for unexplored research avenues based on existing knowledge graphs and literature databases.

The Need for Knowledge Extraction in Scientific Research

Scientific literature is inherently complex, often laden with domain-specific jargon, varied formats, and lengthy discussions. Traditional keyword-based searches fall short in identifying nuanced relationships or discovering novel insights. Knowledge extraction via NLP addresses these issues by:

- Identifying key entities and relationships in text.

- Summarizing long documents into digestible formats.

- Clustering related research works for topic modeling.

- Tracking the evolution of scientific concepts over time.

Such capabilities are essential for accelerating innovation, promoting interdisciplinary collaboration, and guiding research funding and policy decisions.
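To make the last bullet concrete, here is a minimal sketch of tracking a concept over time by counting how many abstracts mention it each year. The `term_trend` helper and the sample `papers` list are invented for illustration; a real pipeline would read publication years and abstracts from a bibliographic database and would likely match synonyms as well as the exact term.

```python
import re
from collections import Counter

def term_trend(papers, term):
    """Return {year: share of that year's papers whose abstract mentions `term`}."""
    mentions = Counter()
    totals = Counter()
    # Whole-word, case-insensitive match on the literal term.
    pattern = re.compile(r"\b" + re.escape(term) + r"\b", flags=re.IGNORECASE)
    for year, abstract in papers:
        totals[year] += 1
        if pattern.search(abstract):
            mentions[year] += 1
    return {year: mentions[year] / totals[year] for year in sorted(totals)}

# Hypothetical (year, abstract) pairs standing in for a real corpus.
papers = [
    (2018, "We train an LSTM for sequence labelling."),
    (2018, "A convolutional baseline for text classification."),
    (2020, "A transformer encoder improves entity recognition."),
    (2020, "Transformer models scale well with data."),
]
for year, share in term_trend(papers, "transformer").items():
    print(year, f"{share:.0%}")
```

Reporting a share rather than a raw count keeps the trend comparable across years even when the number of published papers changes.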

Core NLP Techniques for Knowledge Extraction

To effectively extract knowledge from scientific documents, NLP systems typically rely on a blend of classic and modern methodologies:

1. Named Entity Recognition (NER)

NER involves identifying and classifying entities like authors, institutions, chemicals, genes, and methods within scientific text. For instance, in biomedical literature, NER detects mentions of drugs, diseases, and proteins; the interactions between them are the job of relation extraction.
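As a rough illustration of the input and output of NER, a dictionary lookup can stand in for a trained model. The sketch below assumes a tiny hand-made gazetteer; `GAZETTEER` and `find_entities` are names made up for this example, and production systems would typically fine-tune a model on annotated text rather than rely on a word list.

```python
import re

# Hypothetical mini-gazetteer; a real system would load a curated vocabulary
# or use a trained sequence-labelling model instead.
GAZETTEER = {
    "DRUG": ["metformin", "aspirin"],
    "DISEASE": ["type 2 diabetes", "colorectal cancer"],
    "GENE": ["BRCA1", "TP53"],
}

def find_entities(text):
    """Return (start, end, label, surface_text) tuples sorted by position."""
    matches = []
    for label, terms in GAZETTEER.items():
        for term in terms:
            # Word boundaries stop a term from matching inside a longer token.
            pattern = r"\b" + re.escape(term) + r"\b"
            for m in re.finditer(pattern, text, flags=re.IGNORECASE):
                matches.append((m.start(), m.end(), label, m.group(0)))
    return sorted(matches)

sentence = ("Metformin is widely prescribed for type 2 diabetes, "
            "and TP53 mutations are common in colorectal cancer.")
for start, end, label, surface in find_entities(sentence):
    print(f"{label:8} {surface!r} [{start}:{end}]")
```

A lookup like this cannot recognize names it has never seen, which is the main reason learned models are preferred for scientific text.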

2. Part-of-Speech Tagging and Dependency Parsing

These foundational techniques enable a deeper understanding of sentence structure, helping systems parse syntactic relationships and extract relevant phrases.

3. Relation Extraction

Once entities are recognized, relation extraction identifies the links between them. This is crucial for building knowledge graphs that represent interconnected scientific concepts.
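One simple way to picture this step is a pattern-based extractor that looks for a trigger phrase between two known entities. This is only a sketch: the trigger phrases in `RELATION_PATTERNS` and the `extract_relations` helper are assumptions for the example, and learned relation classifiers handle far more varied phrasing than fixed patterns can.

```python
import re

# Hypothetical trigger phrases mapped to relation labels.
RELATION_PATTERNS = {
    "TREATS": r"(?:treats|is used to treat)",
    "INHIBITS": r"(?:inhibits|blocks)",
    "CAUSES": r"(?:causes|induces)",
}

def extract_relations(sentence, entities):
    """Return (subject, relation, object) triples for entity pairs
    that are directly joined by one of the trigger phrases."""
    triples = []
    for subj in entities:
        for obj in entities:
            if subj == obj:
                continue
            for label, trigger in RELATION_PATTERNS.items():
                pattern = re.escape(subj) + r"\s+" + trigger + r"\s+" + re.escape(obj)
                if re.search(pattern, sentence, flags=re.IGNORECASE):
                    triples.append((subj, label, obj))
    return triples

sentence = "Metformin is used to treat type 2 diabetes."
print(extract_relations(sentence, ["Metformin", "type 2 diabetes"]))
```

The output triples have the (subject, relation, object) shape that knowledge graphs are usually built from.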

4. Text Classification

Scientific documents are classified based on topics, methods, findings, or types (e.g., clinical trials, meta-analyses). Classification helps in organizing literature and directing relevant research to stakeholders.
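For a feel of how such a classifier works, here is a small multinomial naive Bayes model written with the standard library only. It is a sketch under obvious assumptions: the four training sentences and the two labels are invented, and a real classifier would be trained on thousands of labelled abstracts, often with a fine-tuned transformer instead.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())

class NaiveBayesClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(doc_count / total_docs)  # log prior
            for word in tokenize(text):
                if word in self.vocab:  # skip words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Invented training examples for two document types.
texts = [
    "randomized controlled trial of drug efficacy in patients",
    "double blind placebo trial with patients enrolled",
    "pooled analysis of published studies with heterogeneity estimates",
    "systematic review and pooled effect sizes across studies",
]
labels = ["clinical_trial", "clinical_trial", "meta_analysis", "meta_analysis"]
model = NaiveBayesClassifier().fit(texts, labels)
print(model.predict("a placebo controlled trial in patients"))
```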

5. Summarization

NLP-based summarization tools condense research papers into short abstracts, helping readers quickly determine the relevance of a document.
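The extractive flavour of summarization can be sketched in a few lines: score each sentence by how frequent its words are in the document and keep the top ones. The `summarize` function, the stopword list, and the naive sentence splitter below are all simplifications chosen for this example; the splitter in particular would stumble on abbreviations such as "et al." that are common in papers.

```python
import re
from collections import Counter

# Deliberately small stopword list for the example.
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "are",
             "that", "for", "on", "with", "this", "we", "by", "as"}

def content_words(text):
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def summarize(text, max_sentences=2):
    """Pick the highest-scoring sentences and return them in original order."""
    # Naive split on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        # Average frequency, so long sentences are not favoured automatically.
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)

text = ("Graphene sensors detect glucose. Graphene sensors are cheap. "
        "The weather was pleasant.")
print(summarize(text, max_sentences=1))
```

Abstractive summarizers work differently: they generate new sentences with a language model rather than selecting existing ones.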

6. Topic Modeling and Clustering

Techniques like Latent Dirichlet Allocation (LDA) identify hidden topics across large corpora, assisting in literature reviews and discovery of emerging trends.
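For readers curious about what happens inside LDA, the following is a compact collapsed Gibbs sampler in pure Python. Treat it as a teaching sketch rather than a production implementation: the function name `lda_gibbs`, the hyperparameter defaults, and the toy documents are assumptions, and established libraries are far faster and better tested.

```python
import random

def lda_gibbs(docs, num_topics, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Collapsed Gibbs sampling for LDA over tokenized documents.

    Returns (doc_topic, topic_word, vocab): per-document topic proportions,
    per-topic word probabilities, and the sorted vocabulary list.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics

    n_dk = [[0] * K for _ in docs]      # topic counts per document
    n_kw = [[0] * V for _ in range(K)]  # word counts per topic
    n_k = [0] * K                       # total tokens per topic
    assignments = []
    for d, doc in enumerate(docs):      # random initial topic for every token
        z_doc = []
        for w in doc:
            k = rng.randrange(K)
            z_doc.append(k)
            n_dk[d][k] += 1
            n_kw[k][word_id[w]] += 1
            n_k[k] += 1
        assignments.append(z_doc)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                wid, k = word_id[w], assignments[d][i]
                # Remove the token's current assignment from the counts.
                n_dk[d][k] -= 1
                n_kw[k][wid] -= 1
                n_k[k] -= 1
                # Conditional probability of each topic for this token.
                weights = [(n_dk[d][t] + alpha) * (n_kw[t][wid] + beta) / (n_k[t] + V * beta)
                           for t in range(K)]
                k = rng.choices(range(K), weights=weights)[0]
                assignments[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][wid] += 1
                n_k[k] += 1

    doc_topic = [[(n_dk[d][t] + alpha) / (len(doc) + K * alpha) for t in range(K)]
                 for d, doc in enumerate(docs)]
    topic_word = [[(n_kw[t][v] + beta) / (n_k[t] + V * beta) for v in range(V)]
                  for t in range(K)]
    return doc_topic, topic_word, vocab

# Toy corpus; real input would be thousands of tokenized abstracts.
docs = [["gene", "protein", "cell"], ["gene", "cell", "protein", "gene"],
        ["climate", "carbon", "emission"], ["carbon", "climate", "warming"]]
doc_topic, topic_word, vocab = lda_gibbs(docs, num_topics=2)
for t, probs in enumerate(topic_word):
    top = sorted(range(len(vocab)), key=lambda v: probs[v], reverse=True)[:3]
    print("topic", t, [vocab[v] for v in top])
```

Because sampling is random, the topics found on a corpus this small can vary with the seed; the structure of the algorithm is the point here, not the specific output.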

7. Semantic Search and Question Answering

Instead of relying on keyword matches, semantic search systems understand the context of user queries, returning more accurate and insightful results.
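The retrieval step of such a system usually boils down to ranking documents by vector similarity to the query. The sketch below shows that ranking with cosine similarity, but note the substitution: it uses TF-IDF vectors, which are still lexical, as a stand-in for the dense embeddings a real semantic search engine would get from a sentence encoder. The function names and sample documents are made up for the example.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(documents):
    """Return (idf, vectors): IDF weights and one sparse TF-IDF vector per document."""
    tokenized = [tokenize(doc) for doc in documents]
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    n = len(documents)
    # Smoothed IDF so every term keeps a positive weight.
    idf = {term: math.log((1 + n) / (1 + df)) + 1 for term, df in doc_freq.items()}
    vectors = [{t: c * idf[t] for t, c in Counter(tokens).items()} for tokens in tokenized]
    return idf, vectors

def cosine(u, v):
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def search(query, documents, top_k=3):
    """Return up to top_k (score, document) pairs, best match first."""
    idf, vectors = build_index(documents)
    q_vec = {t: c * idf[t] for t, c in Counter(tokenize(query)).items() if t in idf}
    scored = [(cosine(q_vec, vec), doc) for vec, doc in zip(vectors, documents)]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_k]

documents = [
    "CRISPR gene editing in zebrafish embryos",
    "Sea level rise under high carbon emission scenarios",
    "Transformer models for protein structure prediction",
]
for score, doc in search("carbon emission", documents, top_k=2):
    print(f"{score:.2f}  {doc}")
```

Swapping `build_index` for an embedding model would let a query like "greenhouse gases" match the second document even though it shares no words with it, which TF-IDF cannot do.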

Advanced NLP Models and Tools

Recent breakthroughs in deep learning and transformer-based models have significantly improved the accuracy of NLP systems in handling scientific texts.

1. BERT and Its Variants

Bidirectional Encoder Representations from Transformers (BERT) and domain-specific variants like SciBERT and BioBERT represent each word in the context of its whole sentence, reading to the left and right of it at once. Fine-tuned versions of these models generally outperform earlier feature-based methods in tasks like NER, classification, and extractive summarization.

2. GPT and Large Language Models (LLMs)

Generative models like GPT-4 and PaLM can produce fluent summaries, answer scientific questions, and even draft literature reviews. This makes them useful for the synthesis stages of literature mining, where extracted findings have to be combined and explained rather than merely located.

3. T5 (Text-to-Text Transfer Transformer)

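T5 casts every NLP task as a text-to-text problem: both the input and the output are plain strings, and a short prefix on the input tells the model which task to perform. This offers a unified approach for summarization, classification, translation, and question answering.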
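The idea is easiest to see in how inputs are formatted. The helper below builds input strings in the prefix style used by the original T5 release ("summarize:" and "question: ... context: ..."); the `to_text_to_text` function itself and the "classify topic:" prefix are inventions for this sketch, and no model is loaded or called.

```python
def to_text_to_text(task, **fields):
    """Format one example as a single input string for a text-to-text model."""
    if task == "summarize":
        return "summarize: " + fields["document"]
    if task == "qa":
        return f"question: {fields['question']} context: {fields['context']}"
    if task == "classify":
        # Hypothetical prefix: a fine-tuned model would need to be trained on it.
        return "classify topic: " + fields["document"]
    raise ValueError(f"unknown task: {task}")

abstract = "We report a perovskite solar cell with improved stability."
print(to_text_to_text("summarize", document=abstract))
print(to_text_to_text("qa", question="What device is reported?", context=abstract))
print(to_text_to_text("classify", document=abstract))
```

Since every task shares this string-in, string-out shape, one model and one training procedure can serve all of them.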

4. Knowledge Graph Construction Tools

Toolkits like Stanford CoreNLP and spaCy provide the entity and relation extraction pipelines that feed a domain-specific knowledge graph, while OpenKE trains embeddings over the resulting graph for tasks such as link prediction.
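Whatever toolkit produces the entities and relations, the graph itself can be pictured as a set of (subject, relation, object) triples. The sketch below stores such triples in an adjacency list and uses breadth-first search to find a chain linking two concepts. The triples are illustrative and not extracted from real papers, and `build_graph` and `find_path` are names chosen for this example.

```python
from collections import defaultdict, deque

def build_graph(triples):
    """Store (subject, relation, object) triples as an adjacency list."""
    graph = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((rel, obj))
    return graph

def find_path(graph, start, goal):
    """Breadth-first search; returns the shortest chain of triples, or None."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for rel, neighbour in graph.get(node, []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append((neighbour, path + [(node, rel, neighbour)]))
    return None

# Illustrative triples only.
triples = [
    ("fish oil", "REDUCES", "blood viscosity"),
    ("blood viscosity", "ASSOCIATED_WITH", "Raynaud syndrome"),
    ("aspirin", "INHIBITS", "platelet aggregation"),
]
graph = build_graph(triples)
for step in find_path(graph, "fish oil", "Raynaud syndrome"):
    print(step)
```

Chains like this, where two concepts are connected only through an intermediate one, are what makes a knowledge graph useful for suggesting links that no single paper states outright.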

Applications of NLP in Scientific Domains

NLP-driven knowledge extraction has found widespread applications across various scientific disciplines:

1. Biomedical Research

- Extracting gene-disease-drug relationships.

- Mining clinical trial outcomes.

- Assisting in systematic reviews and meta-analyses.

2. Environmental Sciences

- Understanding the impact of climate change from vast research.

- Tracking environmental policy changes and their outcomes.

3. Material Science and Chemistry

- Discovering new materials and compounds through literature mining.

- Mapping synthesis procedures and reaction pathways.

4. Physics and Engineering

- Identifying theoretical models and experimental techniques.

- Tracing the development of technologies over time.

5. Social Sciences and Humanities

- Analyzing discourse trends.

- Mapping historical research trajectories.

Integration with Research Workflows

NLP tools are increasingly being integrated into research and publication platforms, aiding scholars throughout the research lifecycle:

- Literature Discovery: Enhanced recommendation systems.

- Manuscript Writing: AI-assisted writing aids.

- Peer Review: NLP tools for plagiarism detection, readability checks, and summarization.

- Data Extraction: Automatic tabulation of data points from tables and figures.

Challenges and Limitations

Despite its promise, NLP-based knowledge extraction faces several challenges:

1. Domain-Specific Language

Scientific texts often contain specialized terminologies and abbreviations that are difficult for general NLP models to understand.
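Abbreviations are a good example of the problem, and also one where a simple heuristic goes a long way. The sketch below looks for the common "long form (SHORT)" pattern and accepts a pair when the initials of the preceding words spell the abbreviation. It is a deliberately simplified heuristic with an invented function name; published abbreviation-detection algorithms cover many more cases, such as letters taken from inside words.

```python
import re

def find_abbreviations(text):
    """Map short forms to long forms for patterns like 'long form (LF)'."""
    found = {}
    for match in re.finditer(r"\(([A-Z]{2,6})\)", text):
        short = match.group(1)
        # Words that appear before the opening parenthesis.
        words_before = re.findall(r"[A-Za-z][A-Za-z-]*", text[:match.start()])
        candidate = words_before[-len(short):]
        initials = "".join(w[0].upper() for w in candidate)
        # Accept only if the initials spell the abbreviation exactly.
        if initials == short:
            found[short] = " ".join(candidate)
    return found

text = ("Patients with chronic obstructive pulmonary disease (COPD) "
        "underwent magnetic resonance imaging (MRI).")
print(find_abbreviations(text))
```

Once the mapping is known, later mentions of the short form can be normalized to the long form before entity recognition runs.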

2. Limited Labeled Data

High-quality, annotated datasets are essential for training supervised NLP models, but such resources are scarce in many domains.

3. Ambiguity and Polysemy

Words with multiple meanings (polysemy) or vague expressions pose difficulties for entity and relation extraction.
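A toy version of word sense disambiguation shows why context matters. The sketch below picks the sense whose hand-written signature words overlap most with the surrounding sentence, in the spirit of overlap-based methods. The `SENSE_SIGNATURES` table and `disambiguate` helper are assumptions for the example; modern systems rely on contextual embeddings rather than word lists.

```python
import re

# Hypothetical sense inventory with a few signature words per sense.
SENSE_SIGNATURES = {
    "cell": {
        "biology": {"membrane", "protein", "nucleus", "tissue", "organism"},
        "electrochemistry": {"voltage", "battery", "electrode", "lithium", "discharge"},
    },
}

def disambiguate(word, context):
    """Return the sense with the largest word overlap, or None if nothing matches."""
    tokens = set(re.findall(r"[a-z]+", context.lower()))
    senses = SENSE_SIGNATURES.get(word.lower(), {})
    scores = {sense: len(tokens & signature) for sense, signature in senses.items()}
    if not scores or max(scores.values()) == 0:
        return None
    return max(scores, key=scores.get)

print(disambiguate("cell", "The cell membrane regulates protein transport."))
print(disambiguate("cell", "The lithium cell voltage dropped during discharge."))
```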

4. Evaluation and Benchmarking

Measuring the effectiveness of knowledge extraction tools remains challenging, with few standard benchmarks available.
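Where a gold-standard annotation does exist, extraction quality is usually reported as precision, recall, and F1. The short function below computes all three over sets of extracted items. The example entities are invented, and exact-match scoring is an assumption; some evaluations also give credit for partial span overlaps.

```python
def precision_recall_f1(predicted, gold):
    """Exact-match precision, recall, and F1 over two collections of items."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Invented (mention, label) pairs for illustration.
predicted = {("metformin", "DRUG"), ("TP53", "GENE"), ("cancer", "DRUG")}
gold = {("metformin", "DRUG"), ("TP53", "GENE"), ("cancer", "DISEASE"), ("BRCA1", "GENE")}
p, r, f1 = precision_recall_f1(predicted, gold)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

Here the mislabelled "cancer" mention counts against precision, while the missed "BRCA1" mention counts against recall.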

5. Ethical and Reproducibility Concerns

Bias in training data, lack of model transparency, and reproducibility issues can impact the trustworthiness of AI-generated insights.

Future Trends and Innovations

As research in NLP and AI advances, the following trends are likely to shape the future of knowledge extraction from scientific literature:

1. Self-Supervised Learning

Techniques that reduce the dependency on labeled data by learning from raw text are gaining traction.

2. Multilingual and Cross-Lingual Models

With global collaboration in science, models that can process texts across languages will be essential.

3. Explainable AI (XAI)

Efforts are underway to make NLP models more interpretable, especially for critical applications in healthcare and policy-making.

4. Interactive and Conversational Interfaces

Conversational agents and chatbots powered by LLMs will allow users to query scientific literature in a natural, interactive manner.

5. Integration with Knowledge Graphs

Combining NLP with semantic web technologies will enable more robust and scalable knowledge representation.

Case Studies

1. CORD-19 Dataset

Released in response to the COVID-19 pandemic, the COVID-19 Open Research Dataset (CORD-19) facilitated global collaboration in mining coronavirus-related literature. NLP tools built on it helped track emerging treatments, vaccine developments, and epidemiological trends.

2. Semantic Scholar’s AI Tools

Semantic Scholar incorporates AI-powered tools like TLDR (Too Long; Didn’t Read) summaries, citation context analysis, and influential paper detection to assist researchers in navigating scientific literature.

3. PubMed NLP Applications

NLP models applied to PubMed have significantly accelerated literature reviews, entity tagging, and relationship mapping in biomedical research.

Conclusion

Natural Language Processing (NLP) is revolutionizing the way scientific knowledge is discovered, organized, and utilized in the modern research landscape. As the volume of scholarly literature continues to grow exponentially, along with its complexity, traditional methods of manual analysis and literature review are becoming increasingly inefficient and unsustainable. NLP-based knowledge extraction provides a scalable, intelligent solution that enables researchers to navigate this overwhelming sea of information with speed and accuracy. By transforming unstructured text from research papers, journals, and technical documents into structured, machine-readable data, NLP makes it possible to uncover hidden patterns, extract key findings, and map relationships between scientific concepts.

This automation not only saves valuable time but also significantly enhances the overall quality and efficiency of research processes. By reducing the likelihood of missing critical information, NLP helps researchers uncover connections and insights that may have otherwise gone unnoticed. It allows for comprehensive literature coverage across multiple disciplines, fostering interdisciplinary collaboration and innovation. With the burden of manual data gathering lifted, researchers can allocate more energy toward formulating hypotheses, designing experiments, analyzing results, and pushing the boundaries of scientific knowledge and discovery.

Moreover, the development of domain-specific NLP models tailored to specialized fields such as biomedicine, physics, environmental science, and materials engineering has significantly improved the precision and relevance of knowledge extraction. These models are trained on field-specific terminology and research patterns, enabling more accurate interpretation of complex scientific texts. In parallel, advancements in explainable AI enhance trust by making the decision-making processes of NLP systems more transparent. Multilingual NLP capabilities are also expanding access to global research by breaking language barriers. As these technologies continue to evolve, NLP will become indispensable in scientific workflows—accelerating discovery, enhancing collaboration, and supporting data-driven decision-making worldwide.