Memristor-Based AI Accelerators

Introduction

As artificial intelligence (AI) becomes ubiquitous across industries—from autonomous vehicles and healthcare to smart cities, industrial automation, and robotics—the demand for faster, more efficient computing architectures is surging. Traditional digital processors such as CPUs and GPUs, while powerful, are increasingly constrained by their energy consumption and data movement inefficiencies. This is particularly problematic for AI workloads, which involve large-scale matrix operations, high memory bandwidth, and real-time responsiveness. To address these bottlenecks, researchers and engineers are turning to memristor-based AI accelerators—an emerging class of hardware that promises to redefine how AI is processed at both edge and cloud levels.

Memristors, short for “memory resistors,” are two-terminal, non-volatile electronic devices that simultaneously function as memory and computation units. Unlike conventional digital architectures that separate memory and processing—creating the notorious “von Neumann bottleneck”—memristors allow for in-memory computing. This architectural innovation minimizes data movement between memory and processor, significantly reducing latency, power consumption, and system complexity. These attributes make memristors exceptionally well-suited for AI applications that demand high-speed and energy-efficient computation.

One of the key strengths of memristors lies in their ability to store and process data in analog form. Neural networks, the backbone of most AI systems, rely heavily on operations like matrix-vector multiplications. Memristor arrays can perform these computations directly and in parallel using their physical properties, leading to a massive increase in computational efficiency. This parallelism allows for faster inference and lower power draw, especially valuable in edge AI scenarios such as wearable devices, mobile applications, drones, and autonomous systems where power and space are limited.

Moreover, memristors are a natural fit for neuromorphic computing, which aims to mimic the structure and operation of the human brain. Their analog behavior, multi-state memory capabilities, and compatibility with spiking neural networks make them essential components in building brain-inspired AI systems.

Despite their promise, challenges remain in memristor technology, including variability in device fabrication, limited endurance, and the need for optimized software toolchains and AI models compatible with analog computation. However, with ongoing research and growing industry investment, these obstacles are steadily being addressed.

In the near future, memristor-based AI accelerators are poised to become a cornerstone of next-generation computing, driving innovations in low-power, high-performance intelligent systems across all sectors of technology.

In this article, we explore the principles behind memristor technology, their application in AI accelerators, key benefits and challenges, real-world implementations, and the potential future of this groundbreaking technology.

What are Memristors?

Memristors are non-volatile devices that retain their resistance state even when power is turned off. Predicted theoretically by Leon Chua in 1971 and physically realized by HP Labs in 2008, the memristor is often described as the fourth fundamental passive circuit element, complementing the resistor, capacitor, and inductor.

They work by modulating electrical resistance based on the history of current that has passed through them—essentially remembering their past states. This property enables them to store data and perform computations simultaneously, setting the stage for in-memory computing.
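To make the "resistance depends on history" idea concrete, here is a minimal sketch of the linear ion-drift model, a common textbook simplification of memristor behavior. The parameter values and the function names (`memristance`, `step`, `apply_pulse`) are illustrative assumptions, not measurements or an established API.

```python
# Linear ion-drift memristor model (simplified; all values are assumptions).
R_ON = 100.0      # ohms, resistance in the fully "on" state
R_OFF = 16_000.0  # ohms, resistance in the fully "off" state
D = 10e-9         # metres, assumed device thickness
MU_V = 1e-14      # m^2 / (V*s), assumed dopant mobility


def memristance(x: float) -> float:
    """Resistance for a normalized internal state x in [0, 1]."""
    return R_ON * x + R_OFF * (1.0 - x)


def step(x: float, voltage: float, dt: float) -> float:
    """Advance the state by one Euler step under an applied voltage."""
    current = voltage / memristance(x)
    dx = MU_V * R_ON / (D * D) * current * dt
    return min(1.0, max(0.0, x + dx))  # keep the state within physical bounds


def apply_pulse(x: float, voltage: float, duration: float, steps: int = 1000) -> float:
    """Integrate the state over a constant-voltage pulse."""
    dt = duration / steps
    for _ in range(steps):
        x = step(x, voltage, dt)
    return x


x0 = 0.1
x1 = apply_pulse(x0, voltage=1.0, duration=0.05)   # positive pulse lowers resistance
x2 = apply_pulse(x1, voltage=0.0, duration=0.05)   # no bias: the state is retained
x3 = apply_pulse(x2, voltage=-1.0, duration=0.05)  # negative pulse raises it again

for label, x in (("start", x0), ("after +1 V", x1), ("after 0 V", x2), ("after -1 V", x3)):
    print(f"{label:>11}: {memristance(x):.1f} ohms")
```

In this model the state only moves while current flows, which is why the resistance is unchanged across the zero-bias interval: that is the non-volatile "memory" part of the device.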

Key Features of Memristors

- Non-volatility: Memristors retain information without continuous power.

- Low energy consumption: Reduced energy required for read/write operations.

- High density: Suitable for dense packing on chips, enabling compact accelerators.

- Scalability: Compatible with CMOS fabrication processes.

- Analog computation: Naturally suited for analog operations like vector-matrix multiplication.

Why Use Memristors for AI Acceleration?

AI workloads, particularly deep neural networks (DNNs), involve massive matrix multiplications and frequent memory accesses. Traditional digital architectures suffer from the von Neumann bottleneck—a limitation where the separation of memory and processing units results in latency and power inefficiency.

Memristor-based AI accelerators address this challenge by:

- Bringing computation closer to data (in-memory computing)

- Reducing data movement between processing and storage

- Enabling massive parallelism for matrix operations

These features can significantly speed up inference, and potentially training, while reducing power consumption. Both are key requirements for AI at the edge and in data centers.

Architecture of Memristor-Based AI Accelerators

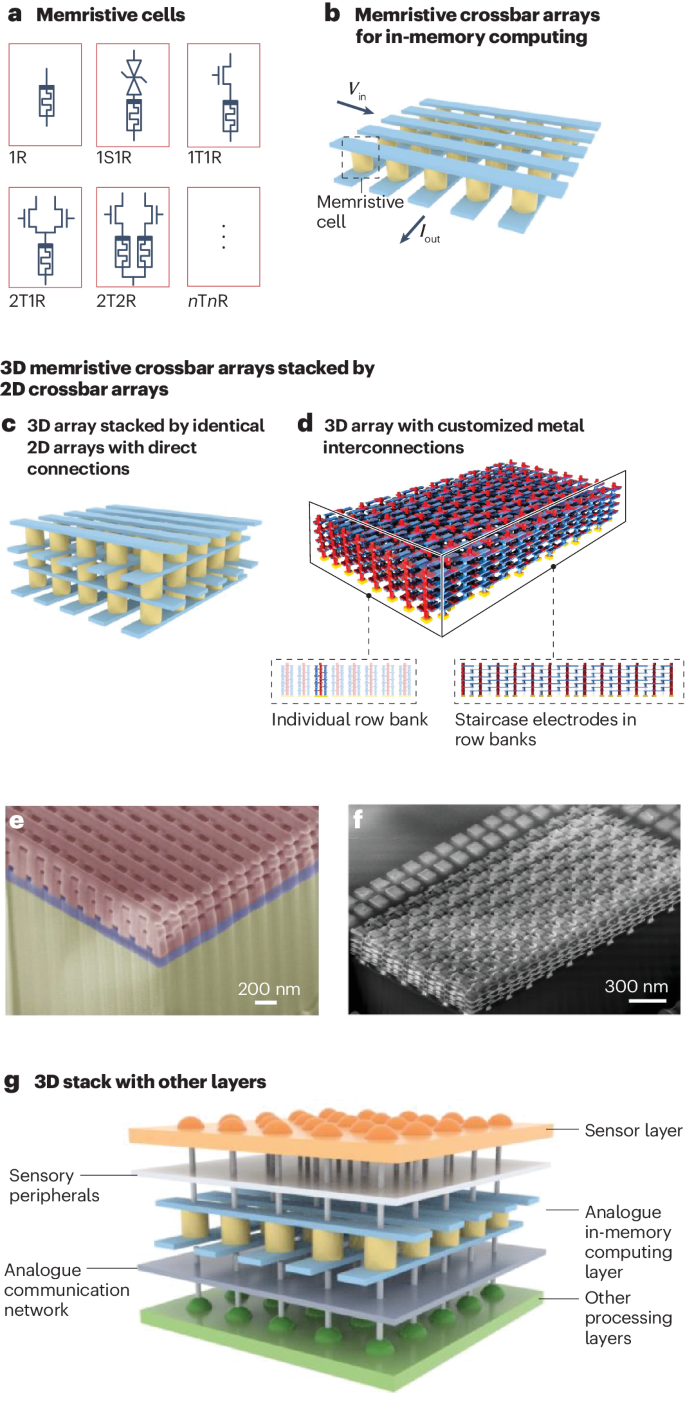

1. Crossbar Arrays

At the heart of memristor-based accelerators lie crossbar arrays—a grid-like architecture where memristors are placed at the intersection of perpendicular wires. These arrays naturally implement vector-matrix multiplications, a foundational operation in neural networks.

- Input voltages are applied along rows

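- The current collected on each column is the sum of every input voltage multiplied by the conductance of the memristor at that crossing (Ohm's law and Kirchhoff's current law), so each column yields one dot product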

Crossbars therefore compute an entire neural network layer in parallel in the analog domain, in a single read operation rather than one multiply-accumulate at a time.
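The crossbar operation described above can be sketched in a few lines of plain Python. This is an idealized model with no wire resistance or noise. The conductance range (`G_MIN`, `G_MAX`) and the differential-pair mapping for signed weights are assumptions chosen for illustration, and the function names are hypothetical.

```python
G_MIN = 1e-6  # siemens, assumed lowest programmable conductance
G_MAX = 1e-4  # siemens, assumed highest programmable conductance


def weights_to_conductances(weights):
    """Map signed weights onto two non-negative conductance arrays."""
    w_max = max(abs(w) for row in weights for w in row)
    scale = (G_MAX - G_MIN) / w_max
    g_plus = [[G_MIN + max(w, 0.0) * scale for w in row] for row in weights]
    g_minus = [[G_MIN + max(-w, 0.0) * scale for w in row] for row in weights]
    return g_plus, g_minus, scale


def column_currents(voltages, conductances):
    """I_j = sum_i V_i * G_ij: Ohm's law per device, summed along each column."""
    n_cols = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(voltages, conductances))
        for j in range(n_cols)
    ]


def crossbar_matvec(voltages, weights):
    """Vector-matrix product computed as a difference of column currents."""
    g_plus, g_minus, scale = weights_to_conductances(weights)
    i_plus = column_currents(voltages, g_plus)
    i_minus = column_currents(voltages, g_minus)
    return [(ip - im) / scale for ip, im in zip(i_plus, i_minus)]


# Three inputs (rows) feeding two outputs (columns).
weights = [[0.5, -1.0], [-0.25, 0.75], [1.0, 0.0]]
inputs = [0.2, 0.1, 0.3]
outputs = crossbar_matvec(inputs, weights)
print(outputs)
```

Because a conductance cannot be negative, each signed weight is represented here by a pair of devices whose column currents are subtracted. Real designs may use a different encoding.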

2. Analog-to-Digital (ADC) and Digital-to-Analog (DAC) Converters

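Since most data input and output remains digital, DACs convert digital inputs into row voltages and ADCs convert the analog column currents back into digital values. These converters are needed to interface with the memristor arrays, but they add area and power overhead, so their resolution and the number of converters shared per array are important design trade-offs.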
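As a rough illustration of the digital-analog boundary, the sketch below pushes 4-bit input codes through an idealized DAC, a single crossbar column, and an 8-bit ADC. The read voltage, conductances, bit widths, and function names are all assumptions made for the example.

```python
V_READ = 0.2  # volts, assumed full-scale read voltage


def dac(code, bits):
    """Convert an unsigned integer code to a row voltage."""
    return V_READ * code / (2 ** bits - 1)


def adc(current, full_scale, bits):
    """Convert a column current to an unsigned integer code, with clipping."""
    levels = 2 ** bits - 1
    ratio = min(1.0, max(0.0, current / full_scale))
    return round(ratio * levels)


input_codes = [3, 0, 15, 7]              # 4-bit activations
column_g = [50e-6, 20e-6, 80e-6, 10e-6]  # siemens, one crossbar column

voltages = [dac(code, bits=4) for code in input_codes]
current = sum(v * g for v, g in zip(voltages, column_g))
full_scale = V_READ * sum(column_g)      # current when every input is at maximum
output_code = adc(current, full_scale, bits=8)

# Error introduced by the ADC, in amperes: at most half of one ADC step.
lsb = full_scale / (2 ** 8 - 1)
adc_error = abs(output_code * lsb - current)
print(output_code, adc_error)
```

Each extra ADC bit halves the step size and therefore the worst-case rounding error, which is the basic resolution trade-off a converter design has to weigh against its cost.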

3. Peripheral Control Circuits

Control units manage weight updates, voltage levels, timing, and integration with external systems, forming a hybrid analog-digital system.

4. Multilayer and 3D Stacking

Advanced designs use multilayered or vertically stacked memristor arrays to further increase computational density and efficiency.

Types of Memristor Materials

Memristor performance varies by material composition. Common types include:

- Resistive RAM (RRAM): Uses metal oxides like TiO₂

- Phase Change Memory (PCM): Changes between crystalline and amorphous states

- Spintronic Memristors: Utilize magnetoresistance, where resistance depends on magnetic state

- Ferroelectric Memristors: Leverage ferroelectric polarization for resistance tuning

Each material has trade-offs in speed, retention, endurance, and fabrication complexity.

Benefits of Memristor-Based AI Accelerators

1. In-Memory Computation

Reduces data transfer latency and energy consumption by computing directly where data resides.

2. Energy Efficiency

Ideal for edge AI and mobile devices with limited power budgets. Some accelerators are reported to draw only a few milliwatts during inference.

3. Massive Parallelism

Memristor arrays perform thousands of operations in parallel, significantly speeding up AI computations.

4. Compact Form Factor

High-density integration leads to smaller chips, beneficial for embedded and wearable devices.

5. Low Latency

Because a crossbar evaluates a whole vector-matrix product in one analog read, response times can be very short, which is critical for real-time applications.

6. Scalability

Compatible with existing CMOS fabrication lines, enabling scalable manufacturing.

Challenges in Memristor-Based AI Acceleration

1. Device Variability

Analog memristor operations are inherently sensitive to external factors like electrical noise, temperature fluctuations, and manufacturing inconsistencies. These variations can lead to unpredictable behavior, affecting the reliability and repeatability of AI computations. Robust calibration and compensation techniques are necessary to ensure consistent performance across devices and operating conditions.
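One way to get a feel for the effect of variability is a small Monte Carlo experiment. The sketch below assumes multiplicative Gaussian variation on every device, which is a modeling assumption rather than a characterization of any real process. The array size and value ranges are arbitrary.

```python
import random


def column_currents(voltages, conductances):
    """Ideal crossbar read: I_j = sum_i V_i * G_ij."""
    n_cols = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(voltages, conductances))
        for j in range(n_cols)
    ]


def perturb(conductances, sigma, rng):
    """Apply multiplicative Gaussian variation to every device."""
    return [
        [g * max(0.0, 1.0 + rng.gauss(0.0, sigma)) for g in row]
        for row in conductances
    ]


def max_relative_error(ideal, actual):
    """Largest relative deviation across all column currents."""
    return max(abs(a - i) / abs(i) for i, a in zip(ideal, actual))


rng = random.Random(0)  # fixed seed so the run is repeatable
rows, cols = 64, 8
conductances = [[rng.uniform(1e-6, 1e-4) for _ in range(cols)] for _ in range(rows)]
voltages = [rng.uniform(0.0, 0.2) for _ in range(rows)]
ideal = column_currents(voltages, conductances)

errors = {}
for sigma in (0.0, 0.02, 0.05, 0.10):
    noisy = perturb(conductances, sigma, rng)
    errors[sigma] = max_relative_error(ideal, column_currents(voltages, noisy))
    print(f"sigma={sigma:.2f}  max relative error={errors[sigma]:.4f}")
```

A sweep like this can help estimate how much device spread a given network tolerates before calibration or compensation becomes necessary.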

2. Limited Precision

Memristors typically support lower bit precision compared to digital systems, often operating in analog or quantized states. While suitable for many inference tasks, this limited precision can degrade the performance of complex AI models requiring fine-grained weight updates or detailed calculations, posing a challenge for broader deployment in demanding applications.
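The precision limit can be illustrated by snapping weights to a small number of evenly spaced levels, as if each device could hold only `2**bits` distinct conductance states. The uniform level spacing, the weight range, and the helper name `quantize_weight` are assumptions for this sketch.

```python
import random


def quantize_weight(weight, bits, w_max=1.0):
    """Snap a weight to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    step = 2.0 * w_max / levels
    clipped = min(w_max, max(-w_max, weight))
    return -w_max + round((clipped + w_max) / step) * step


rng = random.Random(1)  # fixed seed so the run is repeatable
weights = [rng.uniform(-1.0, 1.0) for _ in range(256)]
inputs = [rng.uniform(0.0, 1.0) for _ in range(256)]
exact = sum(w * x for w, x in zip(weights, inputs))

worst_weight_error = {}
dot_error = {}
for bits in (2, 4, 6, 8):
    quantized = [quantize_weight(w, bits) for w in weights]
    worst_weight_error[bits] = max(abs(q - w) for q, w in zip(quantized, weights))
    dot_error[bits] = abs(sum(q * x for q, x in zip(quantized, inputs)) - exact)
    print(f"{bits} bits: worst weight error={worst_weight_error[bits]:.4f}, "
          f"dot-product error={dot_error[bits]:.4f}")
```

The worst-case error per weight is half a level spacing, so it shrinks quickly as the number of reliable states per device grows.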

3. Endurance and Retention

Like flash memory, memristors degrade with repeated write cycles, leading to potential reliability issues over time. Data retention, especially in variable environments, can also be problematic. Ensuring sufficient endurance and stability requires advances in materials science and architectural design to make memristor-based systems viable for long-term AI applications.

4. Training Compatibility

Backpropagation and precise weight updates required for training deep neural networks are difficult to implement directly on memristor arrays. The non-linear, stochastic behavior of memristors complicates gradient-based learning. Researchers are exploring alternative learning methods, but most current implementations still rely on digital pre-training followed by memristor-based inference.
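A simple way to see why precise weight updates are hard is a "soft bounds" update model, in which each programming pulse moves the conductance by a fraction of the remaining range. This is a simplified model chosen for illustration. The step fraction `ALPHA` and the normalized range are assumptions.

```python
G_MIN, G_MAX = 0.0, 1.0  # normalized conductance range
ALPHA = 0.1              # assumed fraction of the remaining range moved per pulse


def potentiate(g):
    """One 'increase' pulse: the step shrinks as g approaches G_MAX."""
    return g + ALPHA * (G_MAX - g)


def depress(g):
    """One 'decrease' pulse: the step shrinks as g approaches G_MIN."""
    return g - ALPHA * (g - G_MIN)


g = 0.5
trace = [g]
for _ in range(10):
    g = potentiate(g)
    trace.append(g)
for _ in range(10):
    g = depress(g)
    trace.append(g)

first_up = trace[1] - trace[0]
last_up = trace[10] - trace[9]
print(f"first up-step={first_up:.4f}, last up-step={last_up:.4f}")
print(f"start={trace[0]:.4f}, after 10 up and 10 down={trace[-1]:.4f}")
```

Ten pulses up followed by ten pulses down do not return the device to its starting value, and the size of each step depends on the current state. Gradient descent assumes updates of a requested size, which is one reason most systems still train digitally and use the array only for inference.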

5. Conversion Overhead

Transitioning AI models from digital frameworks like TensorFlow or PyTorch to analog-compatible formats involves substantial conversion and optimization efforts. New toolchains must bridge the gap between software and hardware, handling quantization, mapping, and analog constraints. This conversion process can introduce inefficiencies and slow adoption without streamlined development workflows.
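The mapping step mentioned above often involves splitting a layer that is larger than one physical array across several fixed-size crossbars. The sketch below shows that partitioning in plain Python. The 128 x 128 tile size and the helper names `tile_matrix` and `tiled_matvec` are hypothetical, not part of any real toolchain.

```python
def tile_matrix(weights, tile_rows, tile_cols):
    """Split a weight matrix into blocks that each fit one crossbar."""
    n_rows, n_cols = len(weights), len(weights[0])
    tiles = {}
    for r0 in range(0, n_rows, tile_rows):
        for c0 in range(0, n_cols, tile_cols):
            block = [row[c0:c0 + tile_cols] for row in weights[r0:r0 + tile_rows]]
            tiles[(r0 // tile_rows, c0 // tile_cols)] = block
    return tiles


def tiled_matvec(inputs, tiles, tile_rows, tile_cols, n_cols):
    """Evaluate each tile separately and accumulate partial sums per output."""
    outputs = [0] * n_cols
    for (tile_r, tile_c), block in tiles.items():
        start = tile_r * tile_rows
        x = inputs[start:start + len(block)]
        for j in range(len(block[0])):
            outputs[tile_c * tile_cols + j] += sum(
                xi * row[j] for xi, row in zip(x, block)
            )
    return outputs


# A 300 x 200 layer with small integer weights, so results compare exactly.
n_rows, n_cols = 300, 200
weights = [[(i - j) % 7 - 3 for j in range(n_cols)] for i in range(n_rows)]
inputs = [i % 5 for i in range(n_rows)]

tiles = tile_matrix(weights, tile_rows=128, tile_cols=128)
tiled = tiled_matvec(inputs, tiles, tile_rows=128, tile_cols=128, n_cols=n_cols)
direct = [sum(inputs[i] * weights[i][j] for i in range(n_rows)) for j in range(n_cols)]
print(len(tiles), tiled == direct)
```

Tiles that share the same output columns produce partial sums that must be added together, typically in digital logic after conversion, which is part of the overhead a mapping tool has to account for.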

Real-World Applications

1. Edge AI and IoT Devices

Battery-powered devices benefit from memristor-based inference engines that operate with minimal power.

2. Autonomous Vehicles

Accelerate sensor fusion, object detection, and real-time decision-making at the edge.

3. Healthcare and Biomedical Devices

Implantable or portable diagnostic tools using memristor accelerators offer real-time data analysis without requiring cloud processing.

4. Industrial Automation

Smart factories and predictive maintenance systems use memristor-based AI to process data locally, reducing latency.

5. Security and Surveillance

Enables always-on vision and audio analysis with minimal power consumption.

Leading Research and Industry Players

1. HP Labs

Pioneers in memristor technology, developing neuromorphic systems and crossbar architectures.

2. IBM Research

Explores phase-change memory and hybrid analog-digital systems for AI applications.

3. Intel

Pursues neuromorphic research through the Loihi chip. Loihi is a digital design rather than a memristor device, but its spiking architecture targets the same class of brain-inspired workloads.

4. Knowm Inc.

Focuses on commercializing memristor-based neuromorphic computing platforms.

5. Tsinghua University and MIT

Academic leaders in memristor device modeling, crossbar design, and AI system integration.

Future Outlook

1. Hybrid Systems

Combining memristors with traditional digital and analog processors allows for workload-optimized computing. While memristors handle parallel, low-power AI inference efficiently, digital systems manage control logic and precision tasks. This synergy maximizes performance and flexibility, paving the way for scalable, adaptable hardware tailored to diverse AI applications across devices and platforms.

2. Analog Training Algorithms

Current AI models are typically trained on digital hardware and then mapped onto memristor arrays for inference. Emerging research in analog training algorithms aims to enable direct learning on memristor hardware, reducing energy and time costs. This could unlock fully autonomous, low-power AI systems capable of continual learning at the edge.

3. Robust Toolchains

For memristor-based AI to achieve mainstream adoption, comprehensive software support is essential. This includes compilers, model converters, simulators, and debugging tools tailored to memristor architectures. Robust toolchains will bridge the gap between software developers and hardware designers, streamlining deployment and fostering wider experimentation and innovation in the ecosystem.

4. AI at the Edge Expansion

Memristor accelerators are ideally suited for edge AI applications due to their compact size and ultra-low power usage. As edge computing expands into areas like autonomous drones, wearables, and smart sensors, memristors will play a critical role in enabling real-time decision-making and AI-powered autonomy in resource-constrained environments.

5. Standardization and Ecosystem Growth

Widespread adoption of memristor AI accelerators requires collaboration across academia, industry, and standards organizations. Establishing common design frameworks, interface protocols, and performance benchmarks will drive interoperability and reduce development friction. A mature ecosystem will encourage investment, accelerate innovation, and promote reliable, scalable integration of memristor technology across sectors.

Conclusion

Memristor-based AI accelerators represent a groundbreaking advancement in the pursuit of efficient, scalable computing for the artificial intelligence (AI) era. These devices mark a fundamental shift in hardware architecture by merging memory and processing into a single element, effectively overcoming the limitations of traditional von Neumann systems where separate memory and processing units result in latency, energy inefficiencies, and data bottlenecks. By integrating computation directly into memory arrays, memristors significantly reduce data transfer requirements—dramatically improving performance, lowering power consumption, and enhancing the overall efficiency of AI processing.

This architectural innovation is especially critical as AI workloads become increasingly data-intensive and pervasive across industries, from autonomous vehicles and robotics to IoT, healthcare, and wearable technologies. Memristor-based accelerators excel in matrix-heavy operations, such as those required for deep learning inference, by allowing for highly parallelized and analog computation. This means large-scale neural networks can be processed faster and with less energy compared to conventional digital processors like GPUs and CPUs.

One of the most promising applications of memristor technology is in edge and embedded AI systems, where constraints on power, space, and cooling make traditional hardware impractical. Memristors offer a compact and energy-efficient solution that can bring real-time intelligence to mobile devices, sensors, drones, and other embedded platforms. Their non-volatile nature also allows them to retain data without continuous power, further optimizing energy usage for devices operating intermittently or in remote locations.

Despite their immense promise, memristor-based AI accelerators are not without challenges. Issues such as device variability, limited precision, endurance degradation over time, and difficulties in large-scale integration still need to be addressed. Furthermore, the development of analog-compatible AI models and toolchains remains an ongoing area of research, requiring collaboration between hardware engineers, computer scientists, and software developers.

Nonetheless, the momentum behind memristor innovation is growing rapidly. Academic research, government funding, and private-sector investment are converging to push the technology toward commercialization. As these challenges are progressively resolved, memristors are set to become a foundational component of future AI hardware ecosystems.

Memristor-based AI accelerators offer a unique blend of low power consumption, high-speed performance, and compact size, making them well suited to next-generation intelligent devices. This capability paves the way for smarter, more responsive technologies that can operate efficiently in edge environments. By reducing energy demands and hardware footprints, these systems support environmentally sustainable innovation while enabling seamless integration of AI into everyday applications, from smart wearables to autonomous machines and IoT ecosystems.