Explainable AI (XAI) and Model Interpretability: Enhancing Trust in Artificial Intelligence

Artificial Intelligence (AI) is increasingly being used in critical applications such as healthcare, finance, and autonomous systems. However, the ‘black-box’ nature of many AI models raises concerns about their decision-making processes. Explainable AI (XAI) aims to make AI models more transparent, interpretable, and accountable, ensuring that users can understand and trust AI-driven decisions. Model interpretability is crucial for debugging AI systems, complying with regulatory standards, and fostering user confidence in AI-driven applications.

For students aspiring to become AI specialists, understanding XAI is essential. Enrolling in the Best college in Haryana for B.Tech. (Hons.) CSE provides students with advanced knowledge in AI ethics, model interpretability techniques, and hands-on experience with real-world AI applications.

Artificial Intelligence (AI) has become an integral part of various industries, from healthcare and finance to autonomous systems and customer service. However, as AI systems become more sophisticated and widely adopted, concerns regarding their decision-making processes have grown. Many AI models, particularly deep learning and complex machine learning algorithms, function as “black boxes,” making it difficult to understand how they arrive at their conclusions. This lack of transparency has raised ethical, legal, and trust-related concerns, leading to the emergence of Explainable AI (XAI) and model interpretability as crucial fields of research.

Explainable AI (XAI) refers to a set of methods and techniques that provide human-understandable explanations of AI model decisions. The goal of XAI is to ensure that AI systems operate transparently, making them more trustworthy, accountable, and fair. Model interpretability complements XAI by focusing on understanding and explaining the inner workings of AI models to developers, regulators, and end-users.

The Importance of Explainable AI and Model Interpretability

- Building Trust and Adoption: Trust is fundamental to the widespread acceptance of AI technologies. Users and stakeholders need to understand how AI models arrive at their conclusions, especially in high-stakes industries like healthcare and finance. If AI-generated recommendations or decisions can be explained in a comprehensible manner, users are more likely to trust and adopt these systems.

- Ensuring Fairness and Mitigating Bias: AI models trained on biased data can produce discriminatory outcomes. Explainable AI techniques help identify and mitigate bias by showing how input data influences AI decisions. Interpretability does not guarantee fairness on its own, but it lets organizations detect problems such as reliance on sensitive attributes or their proxies, and check models against ethical standards.

- Regulatory Compliance and Legal Implications: Regulators worldwide are emphasizing the need for transparency in AI systems. The European Union’s General Data Protection Regulation (GDPR), for example, requires organizations to give individuals meaningful information about the logic involved in certain automated decisions that significantly affect them, and its recitals refer to a right to obtain an explanation, although the exact legal scope of that right is still debated. XAI helps organizations meet such requirements by providing interpretable justifications for AI-driven decisions.

- Improving Model Performance and Debugging: AI developers and data scientists need to understand model behavior to fine-tune and optimize performance. Explainability tools help debug AI models by revealing, for example, that a model relies on a spurious or leaked feature, which makes errors easier to diagnose and models more reliable. By understanding the inner workings of AI, researchers can create more accurate and effective models.

Techniques for Explainable AI and Model Interpretability

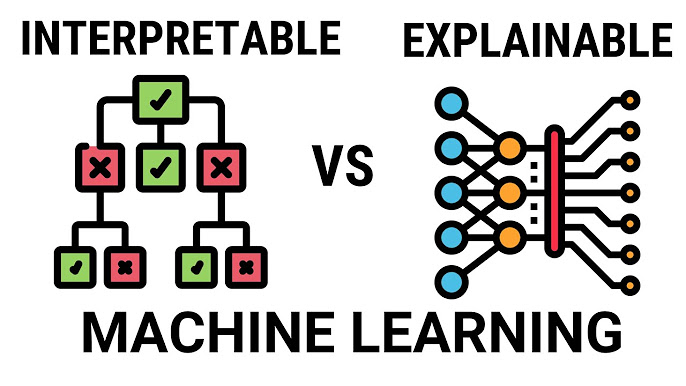

Several techniques have been developed to enhance the explainability of AI models. They fall broadly into two groups: intrinsic interpretability (models that are transparent by design) and post-hoc interpretability (explanations generated for a model after it has been trained).

- Intrinsic Interpretability Methods:

- Decision Trees: These models follow a hierarchical structure that makes it easy to trace decision paths.

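- Linear and Logistic Regression: Because each prediction is a weighted sum of the inputs (passed through a sigmoid in logistic regression), these models are inherently interpretable: the sign and size of each coefficient show how a feature affects the output, provided the features are on comparable scales.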

- Rule-Based Models: Approaches such as decision lists, rule sets, and association rule learning produce explicit if-then rules that a person can read and check.

- Post-Hoc Interpretability Methods:

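- Feature Importance Analysis: Techniques like SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-agnostic Explanations) show how input features influence predictions. SHAP attributes each prediction to the features using Shapley values from cooperative game theory, while LIME explains a single prediction by fitting a simple model to the black box’s behavior around that input.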

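- Partial Dependence Plots (PDPs): These plots show how the model’s average prediction changes as one feature varies. Rather than holding the other variables at fixed values, a PDP sets the chosen feature to each value of interest for every row in the data and averages the resulting predictions over the observed values of the other features.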

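- Surrogate Models: A simple model, such as a decision tree, is trained on the complex model’s predictions so that it mimics the complex model. The surrogate’s structure then serves as an explanation, which is only as reliable as the surrogate’s agreement (fidelity) with the original model.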

- Visualization Techniques: Heatmaps, saliency maps, and activation maps are commonly used to interpret deep learning models, particularly in image recognition applications.
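
The averaging step in a partial dependence plot is easy to miss, so here is a minimal sketch in plain Python. The model `black_box`, its coefficients, and the small `DATA` table are hypothetical stand-ins chosen for illustration; a real PDP would call a trained model’s prediction function over a real dataset and usually plot the resulting curve.

```python
from statistics import mean
from typing import Callable, Sequence


def black_box(income: float, debt: float) -> float:
    """Hypothetical opaque model with an income-by-debt interaction term."""
    return 0.5 * income - 0.8 * debt + 0.01 * income * debt


# Hypothetical (income, debt) rows, e.g. in thousands.
DATA = [(40.0, 10.0), (60.0, 20.0), (80.0, 5.0), (100.0, 30.0)]


def partial_dependence(
    model: Callable[..., float],
    rows: Sequence[Sequence[float]],
    feature: int,
    grid: Sequence[float],
) -> list[float]:
    """Average prediction when `feature` is set to each grid value for every row.

    Every other feature keeps its observed value in each row, so the result
    averages over the data instead of freezing the other features.
    """
    curve = []
    for value in grid:
        predictions = []
        for row in rows:
            modified = list(row)
            modified[feature] = value  # overwrite only the feature of interest
            predictions.append(model(*modified))
        curve.append(mean(predictions))
    return curve


if __name__ == "__main__":
    grid = [40.0, 60.0, 80.0, 100.0]
    for value, avg in zip(grid, partial_dependence(black_box, DATA, 0, grid)):
        print(f"income={value:6.1f}  average prediction={avg:8.3f}")
```

Because the hypothetical model has an income-by-debt interaction, the slope of this averaged curve (0.6625 per unit of income) differs from the slope you would get by freezing debt at any single value (0.6 at debt 10, 0.8 at debt 30). That is also a reminder that a PDP can hide interactions behind its average.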

Challenges in Implementing Explainable AI

Despite its importance, implementing XAI comes with several challenges:

- Trade-off Between Accuracy and Interpretability: Complex AI models, such as deep neural networks, often achieve higher accuracy than interpretable models like decision trees, particularly on unstructured data such as images, text, and audio. Balancing performance with explainability remains a significant challenge.

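- Scalability Issues: Some explainability techniques are computationally expensive. Exact Shapley values, the basis of SHAP, require evaluating the model on a number of feature subsets that grows exponentially with the number of features, and even sampling-based approximations need many model evaluations per explanation. This can make them impractical for real-time applications or very large datasets.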

- Standardization and Evaluation: There is no universal standard for measuring explainability, making it difficult to assess and compare different XAI techniques across industries.

- Human-Centric Explanations: AI explanations need to be meaningful to diverse users, from technical experts to non-technical stakeholders. Creating explanations that are both accurate and user-friendly is an ongoing challenge.
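
The scalability problem with SHAP comes from the Shapley value it is built on. In the standard definition below, N is the set of n features, and v_x(S) is the model’s expected output for input x when only the features in S are known; how the unknown features are filled in varies between SHAP variants and is left abstract here.

```latex
\phi_i(x) = \sum_{S \subseteq N \setminus \{i\}}
  \frac{|S|!\,(n - |S| - 1)!}{n!}
  \Bigl[ v_x\bigl(S \cup \{i\}\bigr) - v_x(S) \Bigr]
```

The sum runs over all 2^(n-1) subsets of the other features, so with n = 30 features a single feature’s attribution already involves 2^29 ≈ 5.4 × 10^8 coalitions. This is why practical tools rely on sampling-based approximations (such as KernelSHAP) or on model-specific algorithms (such as TreeSHAP, which computes exact values for tree ensembles in polynomial time).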

The Future of Explainable AI

As AI continues to evolve, research in explainability will focus on several key areas:

- Hybrid Approaches: Combining multiple explainability techniques can offer more comprehensive insights into AI models. Future advancements may integrate rule-based systems with deep learning interpretability techniques.

- AI Ethics and Responsible AI: Organizations are increasingly emphasizing AI ethics to ensure responsible AI deployment, and future AI models are likely to be designed with transparency and fairness considerations built in.

- Regulatory Frameworks and Policies: Governments and regulatory bodies will continue to refine AI transparency laws, ensuring that AI models are accountable and interpretable.

- Human-in-the-Loop AI: AI systems will increasingly incorporate human feedback into their learning processes, allowing for more interactive and interpretable decision-making.

The Need for Explainable AI in Modern Applications

AI systems are becoming integral to decision-making processes in fields like medical diagnostics, credit scoring, and autonomous driving. However, many AI models, particularly deep learning networks, operate as ‘black boxes,’ meaning their internal decision-making processes are opaque. This lack of transparency raises several concerns:

- Trust and Adoption: Users may be reluctant to rely on AI systems if they cannot understand how decisions are made.

- Regulatory Compliance: Many industries need explainability to meet legal requirements, such as those in the General Data Protection Regulation (GDPR), as well as ethical guidelines.

- Error Detection and Debugging: Understanding how an AI model arrives at a decision helps identify biases and improve performance.

To address these concerns, researchers and developers are focusing on techniques that make AI more interpretable while maintaining high performance.

Techniques for Model Interpretability

There are various techniques used to make AI models more interpretable, including:

- Feature Importance Analysis: Identifying which features in the input data have the most impact on the model’s predictions.

- Local Interpretable Model-agnostic Explanations (LIME): Explaining an individual prediction by fitting a simple, interpretable model to the black box’s behavior on perturbed samples around that input.

- Shapley Additive Explanations (SHAP): Quantifying the contribution of each feature to the model’s output.

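- Attention Mechanisms: Used in deep learning models to indicate which parts of the input the model weighted most heavily when making a decision. Attention weights are not always faithful explanations of a model’s reasoning, so they are best treated as a rough guide.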

- Decision Trees and Rule-Based Models: Providing explicit, human-readable decision-making paths.
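
To make the SHAP idea concrete, the sketch below computes exact Shapley values by brute force for a three-feature model. It is not the `shap` library: `model` is a hypothetical function with one interaction term, and treating a missing feature as taking a single `baseline` value is a simplifying assumption (SHAP implementations commonly average over background data instead). Enumerating every coalition is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable

Features = dict[str, float]


def model(x: Features) -> float:
    """Hypothetical opaque model: two linear terms plus an age-by-debt interaction."""
    return 3.0 * x["age"] + 2.0 * x["income"] + x["age"] * x["debt"]


def shapley_values(
    f: Callable[[Features], float], x: Features, baseline: Features
) -> Features:
    """Attribute f(x) - f(baseline) to each feature by enumerating all coalitions."""
    names = list(x)
    n = len(names)

    def value(coalition: set[str]) -> float:
        # Features in the coalition take their values from x; the rest use the baseline.
        point = {k: (x[k] if k in coalition else baseline[k]) for k in names}
        return f(point)

    phi: Features = {}
    for i in names:
        others = [k for k in names if k != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Weighted marginal contribution of feature i to coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


if __name__ == "__main__":
    x = {"age": 2.0, "income": 1.0, "debt": 3.0}
    baseline = {"age": 0.0, "income": 0.0, "debt": 0.0}
    print(shapley_values(model, x, baseline))
```

With `x = {"age": 2.0, "income": 1.0, "debt": 3.0}` and an all-zero baseline, the attributions are 9.0 for `age`, 2.0 for `income`, and 3.0 for `debt`: each linear term goes to its own feature, and the `age * debt` interaction (worth 6.0) is split evenly between the two features involved. The attributions sum to f(x) - f(baseline) = 14.0, the efficiency property that makes Shapley-based explanations additive.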

Students at the Best college in Haryana for B.Tech. (Hons.) CSE learn to implement these techniques in AI projects, ensuring they can build AI systems that are both powerful and interpretable.

XAI in Healthcare, Finance, and Cybersecurity

Explainable AI is especially critical in sensitive industries where decisions can have significant consequences:

- Healthcare: AI models assist in diagnosing diseases, but doctors and patients must understand how predictions are made. XAI techniques help ensure transparency in medical decision-making.

- Finance: Loan approvals and fraud detection systems rely on AI models. XAI helps regulators and customers understand the rationale behind financial decisions.

- Cybersecurity: AI-driven security systems detect threats, and XAI helps security analysts interpret alerts and respond effectively.
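
For the finance case, an intrinsically interpretable model can explain a loan decision directly. The sketch below uses a logistic scoring model whose coefficients are hypothetical (illustrative, not fitted to real data) and ranks each feature’s contribution to the log-odds, coefficient times standardized value, to list the factors that most lowered an applicant’s score. This is a simplified version of what lenders often call reason codes.

```python
from math import exp

# Hypothetical coefficients for standardized features (illustrative, not fitted).
INTERCEPT = -0.5
COEFFICIENTS = {
    "debt_to_income": -1.2,
    "late_payments": -0.9,
    "years_employed": 0.6,
    "credit_history_years": 0.4,
}


def approval_probability(applicant: dict[str, float]) -> float:
    """Logistic regression: the sigmoid of a weighted sum of the features."""
    z = INTERCEPT + sum(w * applicant[k] for k, w in COEFFICIENTS.items())
    return 1.0 / (1.0 + exp(-z))


def reason_codes(applicant: dict[str, float], top_k: int = 2) -> list[str]:
    """Return up to `top_k` features that pushed the log-odds down the most.

    Each contribution is coefficient * value, measured against a feature value
    of 0, which is the average applicant when features are standardized.
    """
    contributions = {k: w * applicant[k] for k, w in COEFFICIENTS.items()}
    negatives = sorted((c, k) for k, c in contributions.items() if c < 0)
    return [k for _, k in negatives[:top_k]]


if __name__ == "__main__":
    applicant = {
        "debt_to_income": 1.5,
        "late_payments": 1.0,
        "years_employed": -0.5,
        "credit_history_years": 0.2,
    }
    print(f"approval probability: {approval_probability(applicant):.3f}")
    print("main reasons:", reason_codes(applicant))
```

For the applicant in the usage block, the approval probability is about 0.03 and the top two reasons are `debt_to_income` and `late_payments`. Because contributions are measured against the average applicant, choosing a different reference point would change the explanation, which is worth stating whenever such reasons are shown to customers or regulators.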

Challenges and Future of Explainable AI

Despite its benefits, XAI faces several challenges, including balancing accuracy with interpretability, managing computational complexity, and addressing ethical concerns. As AI continues to evolve, research in XAI will play a crucial role in making AI systems more accountable and user-friendly.

The Best college in Haryana for B.Tech. (Hons.) CSE offers cutting-edge courses in Explainable AI, ensuring students are equipped with the skills needed to develop transparent and ethical AI systems. By mastering XAI, graduates can contribute to building AI solutions that are not only powerful but also trustworthy and responsible.

Explainable AI (XAI) and model interpretability are crucial in ensuring the transparency, fairness, and trustworthiness of AI systems. As AI becomes more embedded in critical decision-making processes, understanding how these models function is imperative for both technical and non-technical stakeholders. By adopting explainability techniques, organizations can enhance trust, ensure regulatory compliance, and create AI systems that align with ethical principles.

Despite challenges such as the trade-off between accuracy and interpretability, ongoing research and technological advancements are paving the way for more transparent AI models. As regulatory requirements evolve and AI ethics gain prominence, the future of AI will prioritize explainability, making AI systems more accountable and user-friendly.

By fostering a culture of transparency and ethical AI development, we can build AI systems that not only enhance technological capabilities but also inspire trust and confidence in their decisions. XAI will also help AI be adopted responsibly in high-stakes fields such as healthcare, finance, and criminal justice, where decisions must be fair and accountable.

For aspiring AI professionals, understanding XAI will become a fundamental skill, with institutions offering specialized courses to prepare students for the evolving demands of AI-driven industries. The shift towards interpretable AI models will also influence corporate policies, prompting businesses to invest in XAI research and development to maintain competitiveness and compliance with emerging regulations.

Ultimately, Explainable AI represents the next frontier in responsible AI development, where transparency and interpretability are not just desirable features but essential pillars of AI’s future. As we continue to refine AI models and improve their ability to provide understandable and meaningful explanations, we will unlock their full potential while ensuring they operate within ethical and legal boundaries. This transformation will redefine human-AI interactions, bridging the gap between innovation and trust, and solidifying AI’s role as a powerful tool for positive societal impact.